The Deepfakes Analysis Unit (DAU) analysed a video that apparently shows India’s Finance Minister Nirmala Sitharaman promoting a financial investment platform, supposedly backed by the Indian government. After running the video through A.I. detection tools and getting our expert partners to weigh in, we were able to conclude that the video was fabricated using A.I.-generated audio.

A Facebook link to the two-minute-and-30-second video in English was escalated to the DAU by a fact-checking partner for analysis. However, the video is not available on the same link anymore. We don’t have evidence to suggest that this suspicious video originated from an account on Facebook or elsewhere.

Sitharaman is captured in a medium close-up in the video. After the initial six seconds, the video frame abruptly expands, making the backdrop relatively wider and capturing other portions of Sitharaman’s body, including her arms. She appears to be seated on a chair and speaking into the camera; her hands seem to move in an animated manner in various frames.

The green stripe, white stripe, and Ashok Chakra of the Indian flag are partially visible in the video on the right as part of the backdrop. The rest of the backdrop comprises a white background with a wooden panel running below it, and a portion of the chair she seems to be seated on.

Bold static text in English, with letters set in white against a dark green background, borders the top and bottom of the video frame. The text at the top reads “Thursday, November 27”, and the text at the bottom reads “Your personal registration link below the video”.

A female voice recorded in first person over Sitharaman’s video track announces in English that for anyone watching the video on “Thursday the 27th of November, this can be a turning point in your life”. It appeals to viewers to watch the video fully, and promises earnings worth “20 lakh rupees in just one month” based on what is revealed in the video.

The voice assures that “this message is not from a scammer” but from the person alleged to be behind the voice: “Nirmala Sitharaman, Finance Minister of India”. It goes on to say “I am not here to convince you or make fake promises” and that viewers can start earning “40,000 rupees in 24 hours”.

The voice adds that the supposed platform offers “steady income that goes straight to your account” and that there is “no risk, no hidden fee” and it’s “fully automatic”. It claims that Sitharaman supposedly “opened an account” with an investment of “22,000 rupees” and that her “balance” stands at “47 lakh rupees”.

The voice informs that the video will not magically make people rich but anyone looking for a “real legal stable source of income” is in the “right place” and should not “miss this moment”, urging them to take prompt action. It adds that the purpose of the video is not to “sell something” but to help “as many people as possible earn from this.”

The voice also claims that Sitharaman’s partner in the project is the “former director of a well-known bank” who knows how to “make profit even during a crisis”. It adds that this person tested the “Quantum A.I.” platform with a small group and everyone earned over “5 lakh rupees in the first week in six months of testing” and “an average profit” of “20 lakh rupees”.

Another claim by the voice is that the supposed platform is an “official platform” “supported” by some “Central Bank” and “the government of India”. The viewers are directed to go to some “official site”, supposedly linked below the video, to access the “programme” that has apparently “changed thousands of lives”, and are assured of being contacted by Sitharaman’s manager after they register.

The overall video quality is good; however, Sitharaman’s mouth region looks a bit blurry. The synchronization between her lip movements and the audio track can still be discerned and seems decent, with a slight lag in some frames. Her mouth seems to move in a puppet-like manner and her teeth appear blurred in some frames, with the upper set not visible in several frames.

Her facial movements look very restricted and her eyes blink in an unnatural manner. As she appears to lift her arms in various frames, the bangles on her right arm appear to change, and the ring on her right ring finger seems to blur, disappear, and reappear in other frames.

In the last 30 seconds of the video, the lower third of the frame appears to wrinkle. The pattern on her saree seems to change; at specific moments where her hands appear to move in an animated manner, they oddly duplicate. It seems as if this lower portion has been isolated from the rest of the video and digital alterations have been made only to the lower portion, resulting in the visible oddities.

On comparing the voice attributed to Sitharaman with that heard in her recorded videos available online, similarities can be identified in the tone and pitch. The accent, however, sounds different; in fact, the pronunciation of “Nirmala Sitharaman” sounds very Western. Overall, the delivery is very scripted, devoid of any intonation, and lacks the pauses that are characteristic of human speech. The audio track is also accompanied by a faint echo-like sound throughout.

We undertook a reverse image search using screenshots from the video and traced Sitharaman’s visuals to this video published on Sep. 15, 2025 from a YouTube channel associated with her. Sitharaman’s clothing in this video and the one we reviewed are identical; the backdrop is somewhat similar but not identical in the two videos, as zoomed-in frames have been used in the video we traced with portions of the backdrop cropped out. Her body language is also not identical in the videos.

Sitharaman speaks in English in the video we traced, which has an echo-like sound similar to that heard in the video we reviewed, but the audio tracks of the two videos are different.

The visible wrinkle in the doctored video toward the end and the odd duplication of hands do not occur in the source video, in which she is wearing the same pair of bangles throughout and there are no rings on either of her hands.

Although her hands appear to move in an animated manner in both videos, only small clips match, and no single segment in the source video is the same duration as the doctored video. The source video is one minute and 18 seconds long, and the doctored video is almost double that duration.
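The duration comparison can be checked with simple arithmetic. The following is a minimal sketch in Python that uses only the two durations stated in this report; the helper name `to_seconds` is hypothetical and chosen for illustration.

```python
def to_seconds(minutes: int, seconds: int) -> int:
    """Convert a minutes-and-seconds duration into total seconds."""
    return minutes * 60 + seconds


# Durations as stated in this report.
source_seconds = to_seconds(1, 18)    # source video: 1 min 18 s
doctored_seconds = to_seconds(2, 30)  # doctored video: 2 min 30 s

# How many times longer the doctored video is than the source video.
ratio = doctored_seconds / source_seconds

print(f"source: {source_seconds} s, doctored: {doctored_seconds} s")
print(f"doctored / source = {ratio:.2f}")
```

A ratio a little under 2 is consistent with the doctored video being almost double the length of the source video.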

A wide white wall with a wooden panel below it and plants on two corners are clearly visible in the backdrop in the source video unlike the doctored video. Translucent white text in bold that reads “MODI STORY” appears in the bottom-left of the video frame. The same text emblazoned on a stylized logo depicting a steaming cup of tea is visible in the top-right corner of the frame. None of these elements are part of the doctored video.

The tone, packaging, and messaging in this video, including the suggestion of making an initial investment of 22,000 rupees, are similar to those repeatedly used in several A.I.-manipulated financial scam videos that we have debunked.

In many such videos authentic visuals of Sitharaman—sometimes stitched together with those of other people—have been used with synthetic audio to fabricate narratives about fraudulent investment schemes or platforms. “Quantum A.I.” is also a recurring name used across many of these videos, such as here and here, among others.

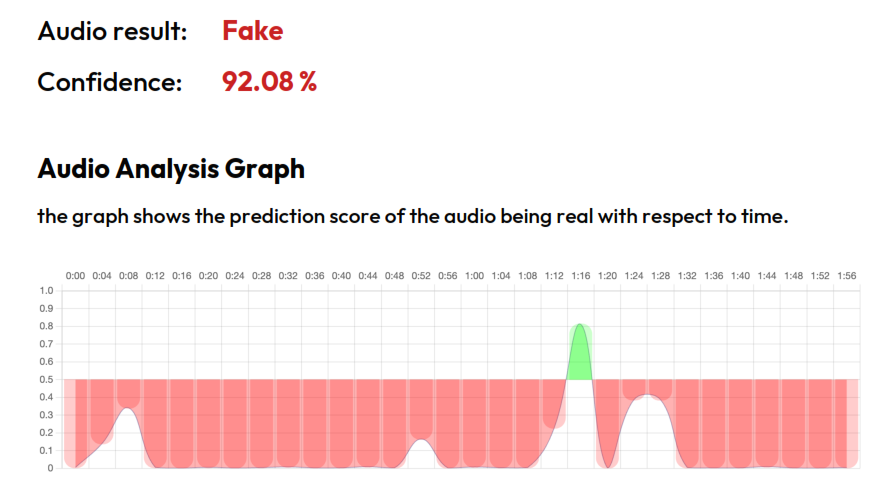

To discern the extent of A.I. manipulation in the video we reviewed, we put it through A.I. detection tools.

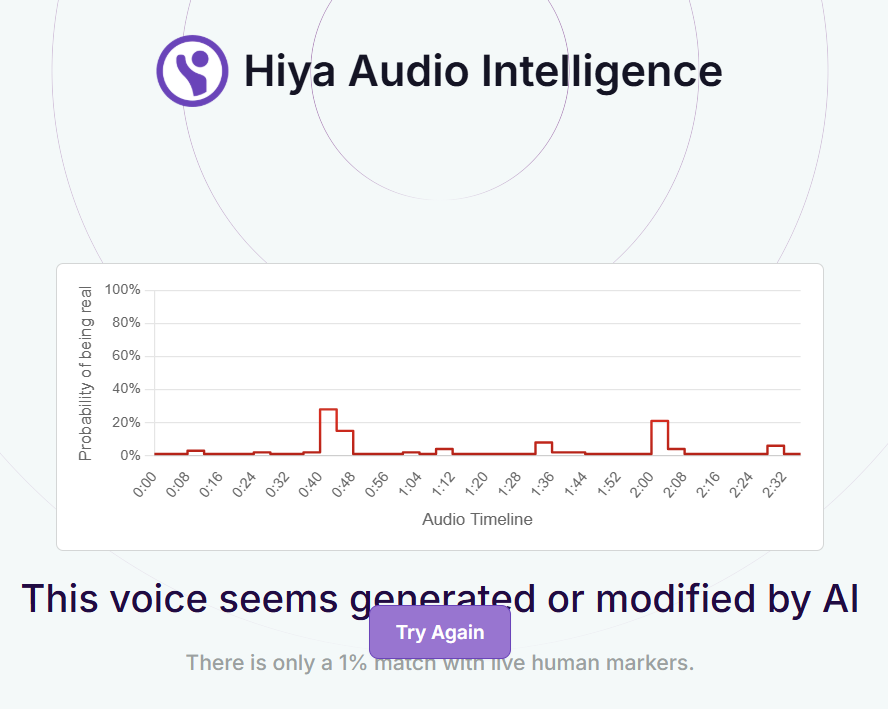

The voice tool of Hiya, a company that specialises in artificial intelligence solutions for voice safety, indicated that there is a 99 percent probability that the audio track in the video was generated or modified using A.I.

Hive AI’s deepfake video detection tool highlighted several markers of A.I. manipulation in the video track. Their audio detection tool indicated that the entire audio track is A.I.-generated.

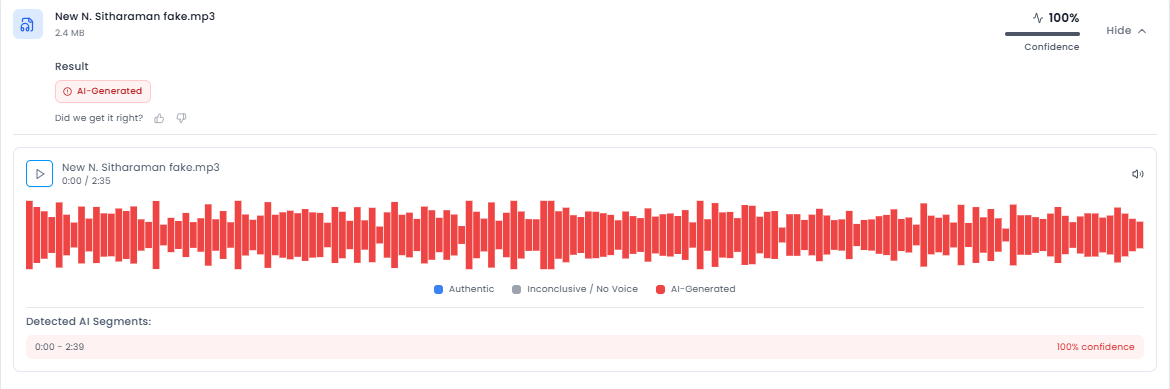

We ran the audio track through the advanced audio deepfake detection engine of Aurigin.ai, a Swiss deeptech company. The result indicated 100 percent confidence in the audio track being A.I.-generated.

We further put the audio track through the A.I. speech classifier of ElevenLabs, a company specialising in voice A.I. research and deployment. The results indicated that there is a 48 percent probability that the audio was generated using their platform, categorising the overall result as “uncertain”. However, a further analysis by the team established that the audio track is synthetic or A.I.-generated.

To get an analysis on the video we reached out to Contrails AI, a Bangalore-based startup with its own A.I. tools for detection of audio and video spoofs.

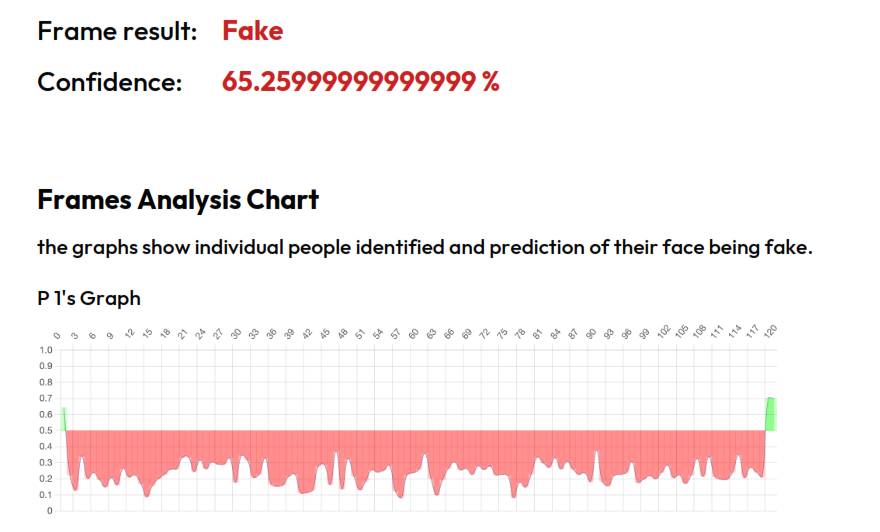

The team ran the video through audio and video detection models. The results indicated signs of A.I. generation in the audio track and A.I. manipulation in the video track.

They stated that a lip-sync or lip-reanimation technique was used to synchronise the lip movements of the speaker with the audio. They further noted that a voice cloning technique was used to create the audio track, which sounds similar to the real voice of the person seen in the video.

To get further expert analysis on the video, we escalated it to the Global Online Deepfake Detection System (GODDS), a detection system set up by Northwestern University’s Security & AI Lab (NSAIL). The video was analysed by two human analysts, and run through 22 deepfake detection algorithms for video analysis and 70 deepfake detection algorithms for audio analysis.

Of the 22 predictive models used for video analysis, two gave a higher probability of the video being fake and the remaining 20 gave a lower probability of the video being fake. Of the 70 predictive models used for audio analysis, 10 gave a higher probability of the audio being fake, while the remaining 60 gave a lower probability of the audio being fake.
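The tally above can also be read as shares of each model set. The following is a minimal sketch in Python that uses only the counts reported above; the function name `share_flagging_fake` is hypothetical and is not part of GODDS or any detection tool.

```python
def share_flagging_fake(flagged: int, total: int) -> float:
    """Percentage of models that gave a higher probability of the media being fake."""
    if total <= 0:
        raise ValueError("total must be a positive number of models")
    return 100.0 * flagged / total


# Counts as reported above: 2 of 22 video models, 10 of 70 audio models.
video_share = share_flagging_fake(2, 22)
audio_share = share_flagging_fake(10, 70)

print(f"video models flagging fake: {video_share:.1f}%")
print(f"audio models flagging fake: {audio_share:.1f}%")
```

These shares only summarise the reported counts and are not a verdict on their own; the video was also reviewed by two human analysts.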

In their report, the team noted a specific moment in the video where the subject’s teeth unnaturally appear on top of her bottom lip. They mention that her teeth appear blurred throughout, something we too point to above.

The team also identified a timecode where the subject’s neck appears to overlap with her hand as she appears to fix her clothing. They further observed that her arms unnaturally disappear when overlapping with her clothing or seemingly duplicate throughout the video as we too noted above.

They highlighted that for a specific duration in the video the bottom portion of the subject’s torso seems disconnected from the top portion as she seems to breathe, adding that this appears as a visual discrepancy rather than a biological phenomenon.

They also corroborated our observation about the subject’s purported voice lacking natural tonal and cadence variations that are characteristic of human voices.

In conclusion, the team stated that the video is likely generated or manipulated via artificial intelligence.

On the basis of our observations and expert analyses, we can conclude that Sitharaman’s visuals were used with an A.I.-generated audio clip to peddle a false narrative about her promoting a financial investment platform.

(Written by Debopriya Bhattacharya and Debraj Sarkar, edited by Pamposh Raina.)

Kindly Note: The manipulated audio/video files that we receive on our tipline are not embedded in our assessment reports because we do not intend to contribute to their virality.