The Deepfakes Analysis Unit (DAU) analysed a video that apparently shows Gen. Upendra Dwivedi, India’s Chief of Army Staff, chiding a supposed journalist, and claiming that U.S. President Donald Trump’s intervention led to de-escalation during Operation Sindoor. After putting the video through A.I. detection tools and getting our expert partners to weigh in, we were able to conclude that the video was manipulated with synthetic audio.

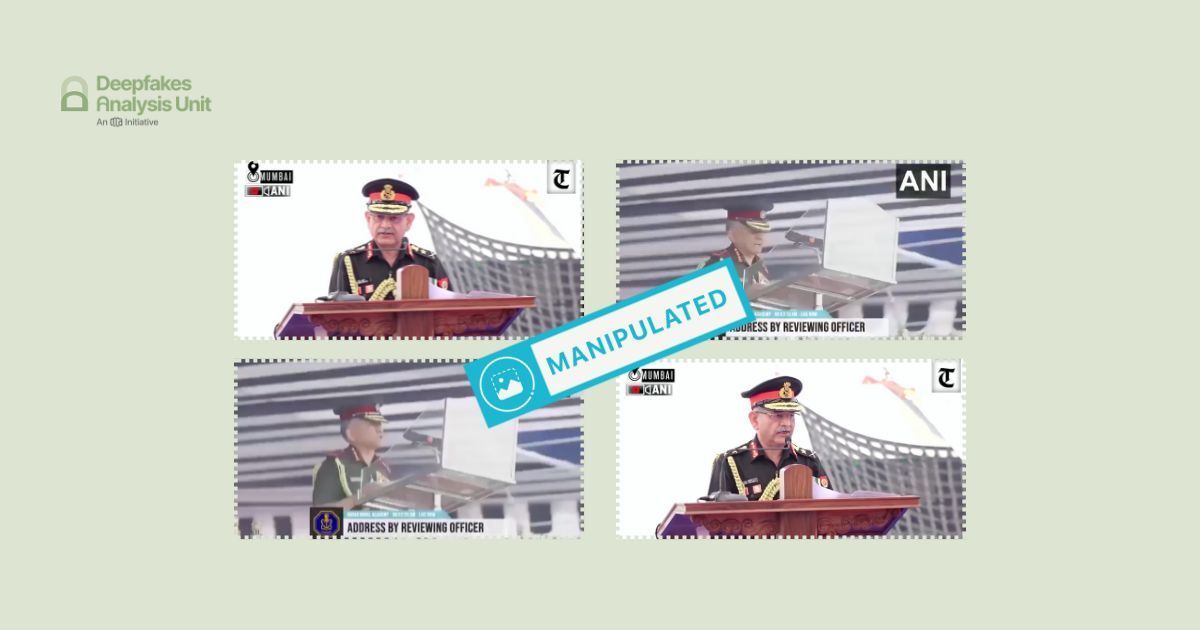

The one-minute-and-53-second video, in English, was discovered by the DAU during social media monitoring. It was embedded in a post on X, formerly Twitter, by an account with the display name “Abbas Chandio” on Jan. 14. The display picture of the account shows a man seated in an office-like setting. The profile details indicate that the account is associated with “law & order, internal security” in Pakistan, which is also listed as the account’s location. A disclaimer by X below the video identifies it as “manipulated media”.

The text with the video, also in English, reads: “Zee News journalist holds up the mirror on ‘Operation Sindoor Continues’ more media stunt than reality. COAS can’t ignore it he nods to Trump’s intervention and Modi’s silent surrender. Worth a watch. #Iran#DigitalBlackoutIran” (sic).

We do not have any evidence to suggest whether the video originated from any account on X or elsewhere.

The video opens with a wide shot of General Dwivedi apparently seated on a chair with the Indian Army logo on its headrest. The table in front of him seems to have the same logo; placed on it are sheets of paper, a miniature flag representing his office, and two microphones giving the impression that he is addressing an audience. He appears to be writing with a pen in hand while looking at something or someone to his right.

To the left of the video frame, another man, also in Indian Army uniform, appears to be standing behind a lectern with a logo etched on it and “anekshaw centre” visible below that.

A large greyish banner with “Indian Army” and “annual press conference - 2026” emblazoned in bold white letters, along with the Indian Army logo, makes up most of the backdrop. The other elements in the backdrop are a black wall with symmetric vertical stripes and two banners with infographics about army operations set against its corners.

Graphics in the top right corner of the video frame consist of white letters set against red and bordered by yellow. A slightly different arrangement of the same letters, “Ind” “Tod” “Digit”, is visible in the bottom right corner of the frame. Given the font and the colour scheme, the graphics resemble the logo used in videos produced by India Today TV, an English news channel in India.

The audio track in the initial few seconds of the video does not seem to belong to either of the two men visible in the frame. Instead, it is a disembodied male voice posing questions to the general. The same voice continues until the frame shifts from a wide shot to a split screen bordered on top by bold, static text graphics in English that read: “annual press conference”.

A close-up of the general appears in one window, and visuals of what seems like an audience seated in rows, comprising people in uniform as well as civilian clothing, appear in the other. Camera crews with tripods, flags, and framed portraits hanging on a green wall are visible behind those people. A man in that audience is presented as the source of the voice.

His face is partially hidden by another man in front of him but is visible enough to discern that his lips appear to be moving. He seems to be holding a microphone in one hand while gesturing animatedly with the other.

The audience member is identified by the voice accompanying his visuals as “Anurag Sharma”, supposedly representing “Zee Media News”, which is also the name of an Indian media company. The voice asks about the “tangible outcomes” of “Operation Sindoor” and claims that “despite more than a year having passed”, “we see no outcome”.

The same voice states that “this appears less like an effective and sustained military campaign” and “more like a long term media exercise.” It further asks: “sir, don't you think that mere press conferences cannot change ground realities?” and asserts that “it is results that make the real difference”.

The visuals then shift to the general captured in a medium close-up with a few audience cutaways in between. The male voice in this segment, also recorded in first person, is different from that associated with the man from the audience.

In a stern tone, the voice asks: “how do you expect us to effectively confront external challenges?”; “if you continue to sit in the media and publicly undermine your own country and its security forces”. It adds that, “national strength begins with internal responsibility”.

The voice notes that “Operation Sindoor is still ongoing” and that “we remain committed to securing the outcomes that are still pending”. It further claims that “President Trump intervened and de-escalated the conflict”, but there wasn’t “lack of intent or effort on our part”; “and diplomatic interference caused our setback” or else “the situation” “could have escalated even to the nuclear level”. The video ends abruptly; the last 16 seconds are a black screen with no audio.

The overall video quality is poor. The general’s lip movements appear to synchronise with the audio track, whereas the purported journalist’s audio and video tracks seem out of sync in the frames where his face is fully visible. The general’s teeth appear blurred and lack definition. In one frame his lower lip appears to protrude unnaturally and some discolouration is visible around his mouth. The supposed journalist’s visuals are especially poor and limited, making it difficult to analyse the shape and definition of his teeth.

We compared the voice attributed to the general in the video with his recorded speeches available online. The diction and accent sound similar; however, his voice is deeper, and the intonation and pauses characteristic of his delivery are not captured in the voice in the video, which also sounds scripted. There is a brief throat-clearing sound in the general’s segment. We could not find any videos online of the supposed journalist in the video.

We undertook a reverse image search using screenshots from the video and traced this video, published on Jan. 13, 2026, on the official YouTube channel of India Today.

The general’s clothing and that of the supposed journalist are identical in the video we traced and the one we reviewed. The backdrop and foreground for each of them are similar across the two videos but not identical, as cropped frames seem to have been used in the video we reviewed. The general’s body language is similar but not identical; however, that of the other man is exactly the same in both videos.

The video we traced features exchanges between the general and journalists in both Hindi and English; the content differs from that of the video we reviewed. The supposed journalist introduces himself as someone working with Zee Media Group, but his name is different from the one used in the video we reviewed. He poses his question to the general in Hindi, not in English as heard in the doctored video.

In the source video, India Today logos are fully visible at the bottom and in the top right corner of the frame, along with the official Indian Army logo, which is not part of the doctored video. The words on the lectern below the logo are also fully visible in the source video and read “Manekshaw centre”. The footage attribution, “Courtesy: @ADGPIINDIANARMY”, appears in tiny white letters in the top left corner of the frame; this element is missing from the doctored video.

The text graphic that reads “annual press conference” has been lifted as is from the source video and used in the doctored video. A sequence of wide shots, the split-screen frames, and audience cutaways have been lifted from the source video and stitched together to create the doctored video, which looks fairly seamless, though the general’s body language appears less stiff in the source video than in the doctored video.

The throat-clearing sound attributed to the general in the doctored video is not heard in the source video, nor are the apparent protrusion of his lip and the discolouration around his mouth visible in it.

The video addressed in this report is similar to several videos that the DAU has debunked in the wake of the India-Pakistan military escalation of May 2025. Most of these videos were fabricated by pairing synthetic audio tracks with video clips of top military officials or ministers.

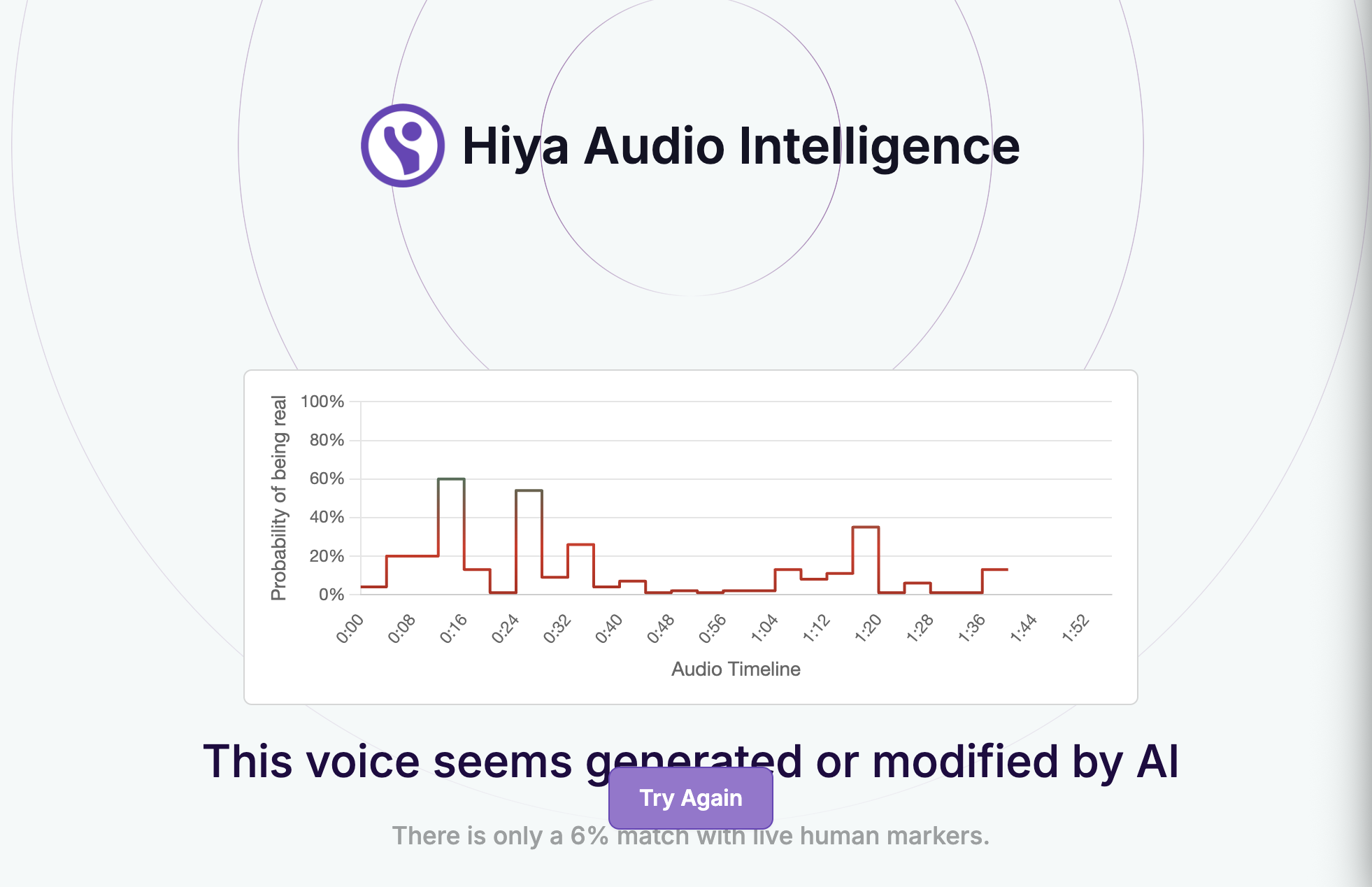

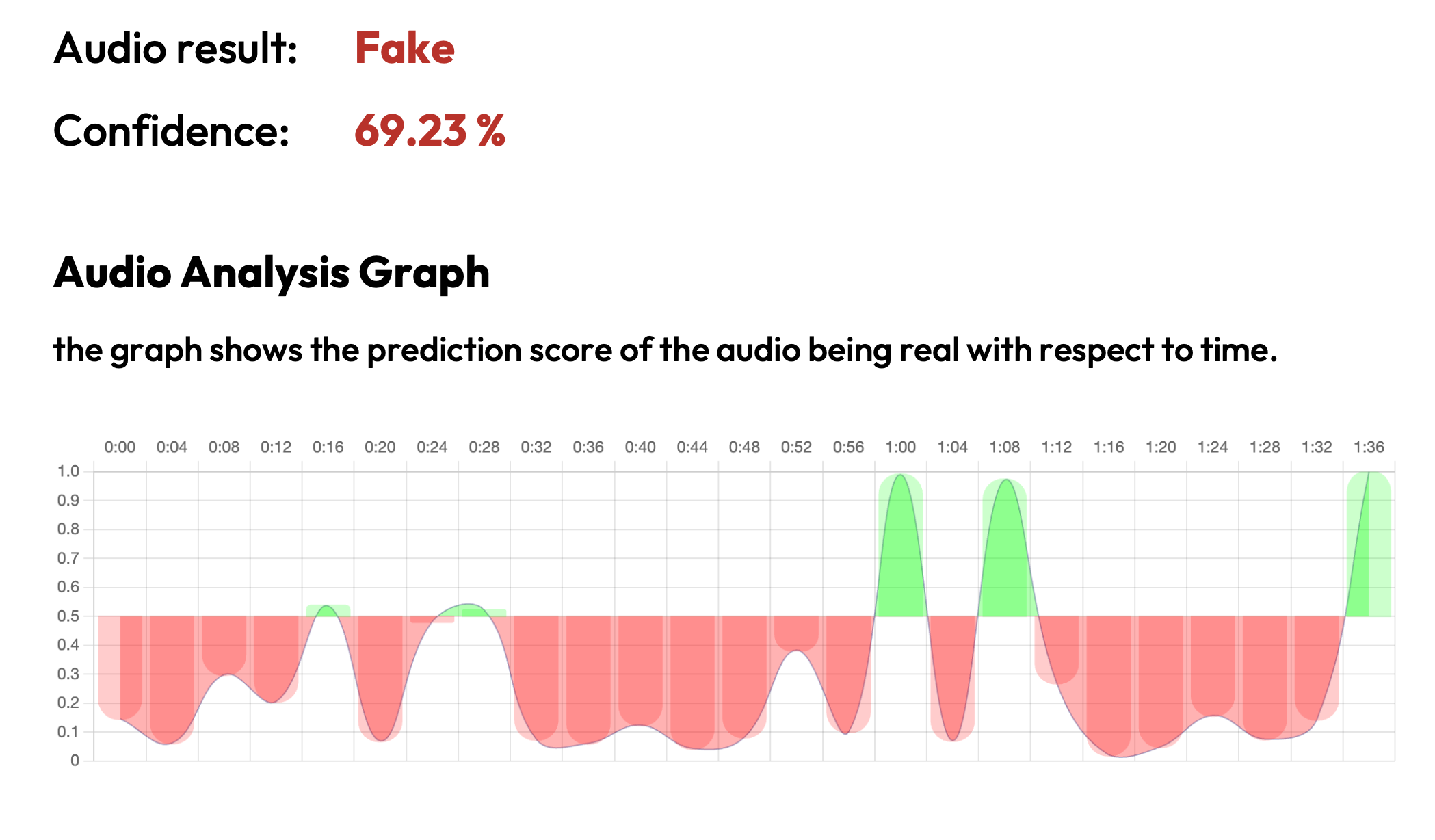

To discern the extent of A.I. manipulation in the video we reviewed, we put it through A.I. detection tools.

The voice tool of Hiya, a company that specialises in artificial intelligence solutions for voice safety, indicated that there is a 94 percent probability that the audio track in the video was generated or modified using A.I.

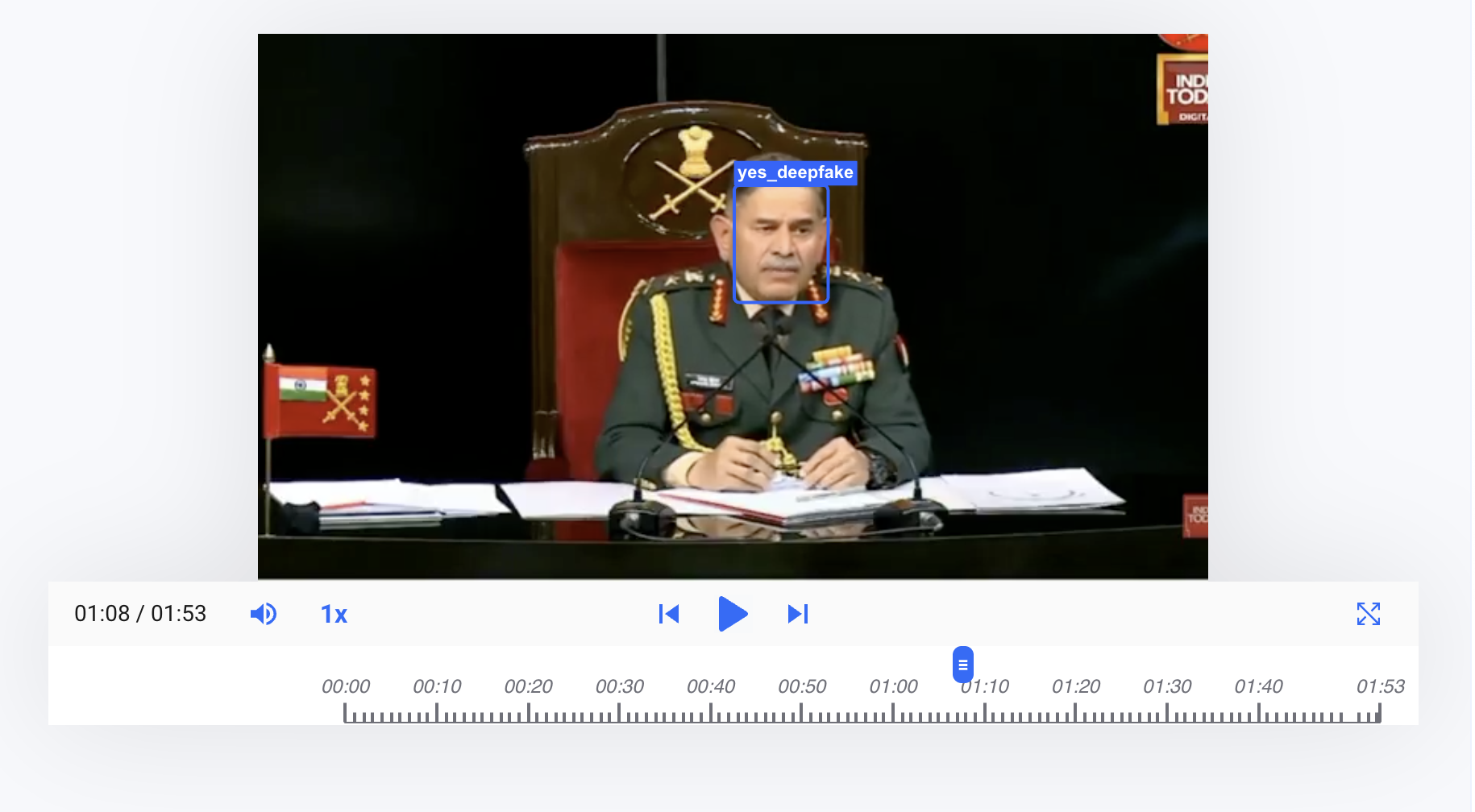

Hive AI’s deepfake video detection tool highlighted a few markers of A.I. manipulation in the video, but only on the general’s face. Its audio detection tool indicated that the entire audio track, except for a 10-second segment, is A.I.-generated.

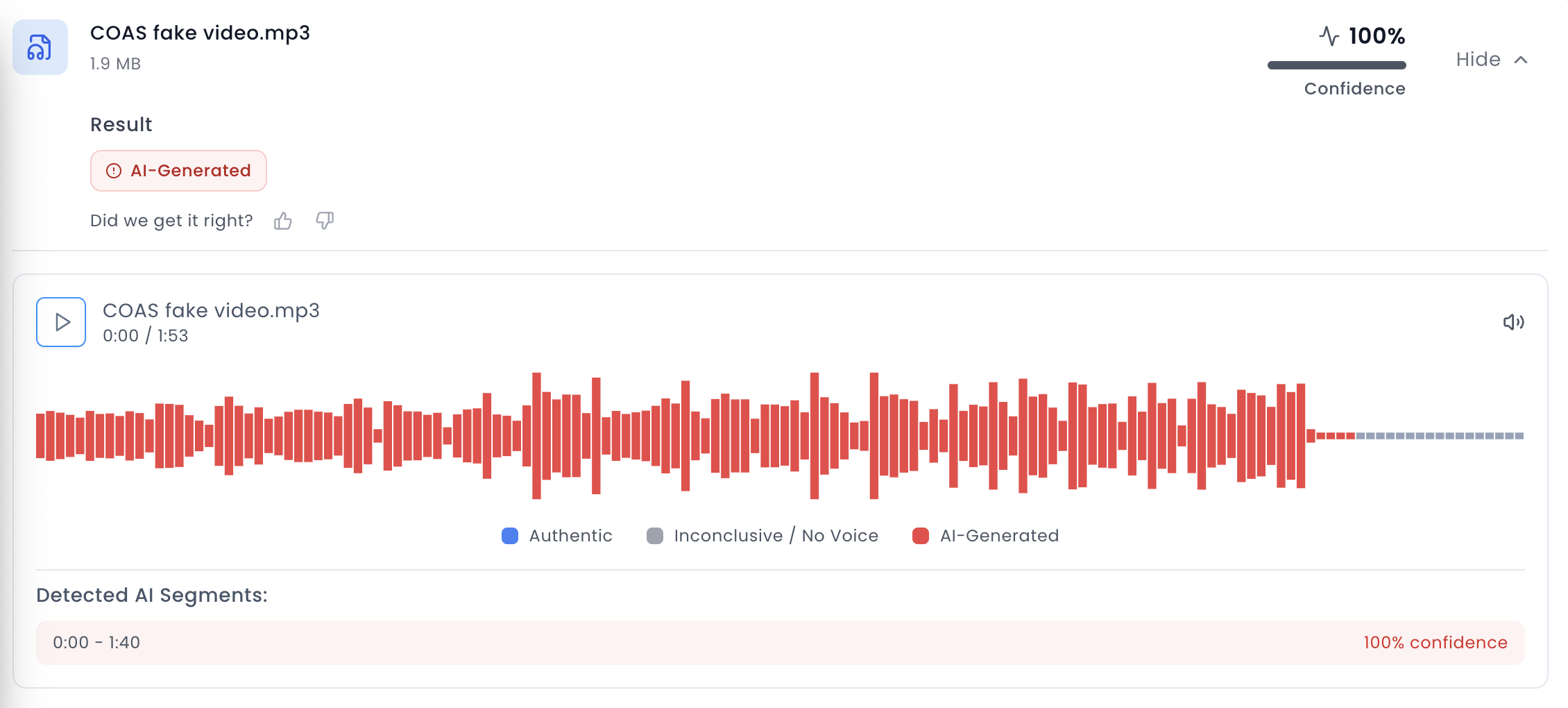

We also ran the audio track through the advanced audio deepfake detection engine of Aurigin.ai, a Swiss deeptech company. The results indicated 100 percent confidence in the audio track being A.I.-generated.

We further put the audio track through the A.I. speech classifier of ElevenLabs, a company specialising in voice A.I. research and deployment. The results indicated that it is “very unlikely” that the audio track used in the video was generated using their platform. However, further analysis by the team established that the audio track is synthetic or A.I.-generated.

For an analysis of the video, we reached out to Contrails AI, a Bangalore-based startup with its own A.I. tools for detecting audio and video spoofs.

The team ran the video through audio and video detection models. The results indicated that the audio track was likely A.I.-generated. However, their detectors gave inconsistent results for the video track, which they attributed to the colour contrast bursts and grainy pixels around the general’s face.

Manual analysis helped them establish that the video had clear signs of lip-syncing using A.I. They added that in the audio track, the voices of both supposed speakers, the general and the reporter, sounded like their respective real voices, indicating the use of an A.I. voice cloning technique.

For expert analysis of the video, we reached out to our partners at RIT’s DeFake Project. Kelly Wu from the project shared the same source video that we have linked to above. Ms. Wu noted that she ran an online search using the name and media house affiliation attributed to the supposed reporter in the video, and concluded that a person with those details does not exist.

Wu added that although the supposed reporter’s mouth is often covered by the microphone or by the person sitting in front of him, she noticed a mismatch between the audio and his visible speech. She pointed to the moment when the word “difference” can be heard in the audio track: the “ce” part of the word is heard after the microphone has moved away from the man holding it, but the audio level does not change.

She also shared a screenshot from the doctored video in which the general’s mouth oddly appears on his hand as he moves it to take off his glasses. She explained that this is a telling artefact because the mouth is the regenerated part of the video.

Akib Shahriyar from the project stated that since the entire frame does not appear to have been regenerated, the video was likely produced using a lip-sync-based method rather than newer image-to-video animation models, which typically re-render the full face or frame. Mr. Shahriyar suggested that a Wav2Lip-style approach could have been used; Wav2Lip is a speech-to-lip generation code repository available online.

Shahriyar added that the low resolution of the clip likely helps mask common lip-sync artefacts. He noted that the generation methods likely used in the video struggle to faithfully reproduce fine-grained mouth details, such as teeth, tongue motion, and inner-mouth structure, at higher resolutions. He said this makes lip-region-only manipulation a plausible and strategic choice for the adversary.

He noted that the video seemed to have been stitched together from multiple segments of the original footage, with the mouth region regenerated to match the fabricated audio attributed to the general. Pointing to a specific timecode in the segment featuring the general, he highlighted a “double-lip generation artefact”, which is “consistent with imperfect temporal alignment during lip-sync generation”. He was referring to the visual oddity that occurs when speech and lip movements fall out of sync during the generation process.

He concluded that all the artefacts strongly indicated that the video was manipulated using localised lip-sync generation rather than full-frame synthesis.

On the basis of our observations and expert analyses, we can conclude that the video is fabricated. An A.I.-generated audio track was paired with visuals of the general and the supposed reporter to peddle a false narrative around Operation Sindoor.

(Written by Debraj Sarkar and Debopriya Bhattacharya, edited by Pamposh Raina.)

Kindly Note: The manipulated audio/video files that we receive on our tipline are not embedded in our assessment reports because we do not intend to contribute to their virality.

You can read the fact-checks related to this piece published by our partners:

AI-Manipulated Video of COAS Dwivedi and a Journalist Over Op Sindoor Viral

Video Of Heated Exchange Between Reporter And COAS Is A Deepfake