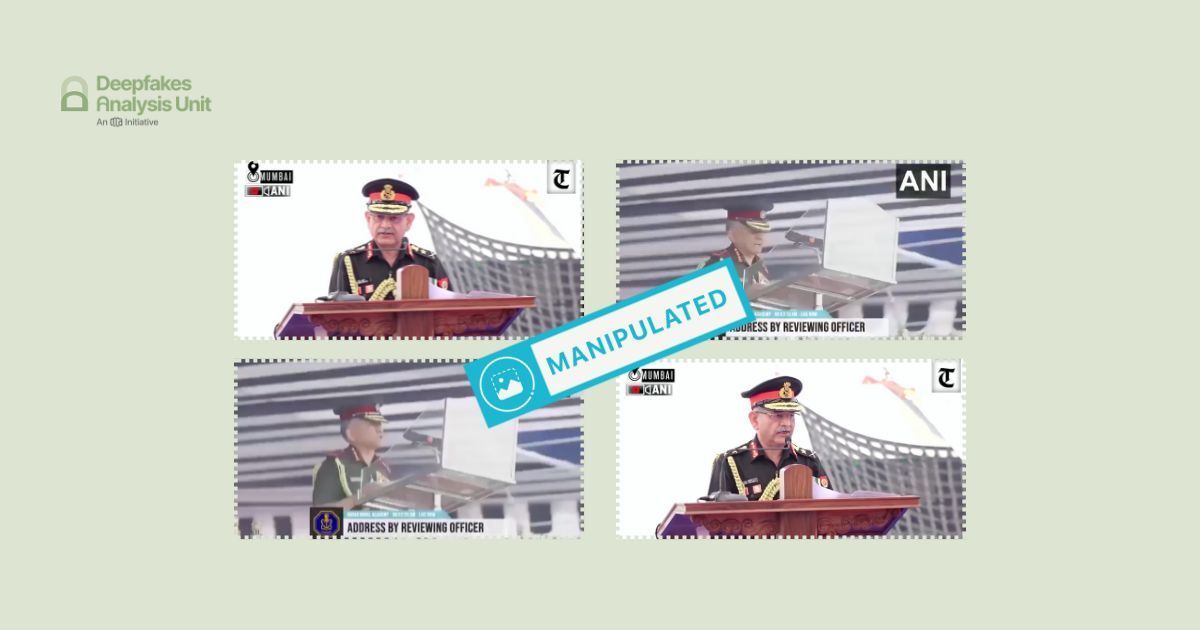

The Deepfakes Analysis Unit (DAU) analysed a video which apparently shows Prime Minister Narendra Modi claiming that the Indian Army gives preference to a particular faith and ideology. After putting the video through A.I. detection tools and getting our expert partners to weigh in, we were able to conclude that the video was manipulated with synthetic audio.

The one-minute-and-29-second video in Hindi was discovered by the DAU during social media monitoring. We were able to access an archived version of the video on a partner fact-checker’s website. The video was embedded in a post on X, formerly Twitter, by an account withheld in India in response to a legal demand; we will refrain from sharing further details about the account.

We do not have any evidence to suggest whether the suspicious video originated from an account on X or elsewhere.

Mr. Modi is captured in a medium close-up in the video; his backdrop is saffron with motifs on it. He appears to be standing, looking straight ahead at something or someone in front of him. Two microphones placed on a lectern with saffron and white embellishments are visible before him, which suggests that he’s addressing an audience.

The cutaways in the video comprise visuals of crowds and a group of dignitaries seated on the stage from where Modi is apparently speaking. The words “Rashtra Prerna Sthal”, arranged in Hindi, border the front of the stage and are visible in the wide shot of the stage. The backdrop of the dignitaries comprises names of political leaders written in Hindi, a still of a public space with green patches and statues, and a slogan also in Hindi. The crowds seem to be holding posters with Modi’s face, and waving the Indian flag and banners in Hindi.

A male voice recorded in first person over Modi’s video track claims that it would like to clarify to “Congress” “leaders” the reason behind the supposed “saffronisation” of the Indian Army. The voice adds that since “the nation is a Hindu nation”, the “army will also work for the Hindu nation”. It also states that “the duty of the army is not only to protect the borders, but also to protect the identity of the nation and its values”.

The voice further mentions that the “people of Bangladesh” should not have the misconception that “Pakistan or someone else would come to their rescue”. The voice makes a derogatory remark against Pakistan’s Field Marshal Asim Munir, and adds that “we know how to harm him,” and “know the reality of his army”.

The voice goes on to say that, “we remember how we broke them in 1971 and created Bangladesh”. It threatens that, “if need be we can display the same strength again” and “snatch away Bangladesh from Bengalis”. The video ends with the voice announcing that “India does not live in delusion and does not spare its enemies.”

The overall video quality is good. The lip movements of the purported speaker synchronize well with the audio track. His upper teeth seem to disappear in some frames and overlap with the upper lip in others; his lower teeth appear to change shape between frames.

In a few instances, his throat seems to inflate as he appears to speak, and an unnatural elongation of the chin occurs simultaneously. His eyes appear to blink rapidly in a rather unusual manner throughout the video. The left lens of his rimless glasses seems to lose its outline and meld into the skin across several frames.

The voice attributed to Modi in the video sounds somewhat similar to his real voice, as heard in his recorded speeches available online. However, it lacks the cadence, intonation, and pauses that are characteristic of his delivery; overall it sounds scripted and monotonous. There is no ambient sound in the audio track despite visuals of crowds in the video. An echo-like sound effect and a static sound can be heard throughout.

We undertook a reverse image search using screenshots from the video and traced Modi’s visuals to this video published on Dec. 25, 2025, on the official YouTube channel of the Prime Minister’s Office of India. Modi’s clothing and backdrop in this video and the one we reviewed are identical. His body language, however, is not an exact match across the videos. The audio in both videos is in Hindi but the content is different.

In the source video, Modi’s hands appear to move in an animated manner and he looks around while addressing the crowd. A thumbnail image of him is visible in the bottom-right corner of the video frame.

Visuals of crowds and dignitaries sitting on the stage are part of the source video, and their cheering makes up the ambient sound. An echo-like sound effect is audible in the source video but it’s not the same as that in the doctored video. The static sound heard in the doctored video cannot be heard in the source video.

None of the visual anomalies noted in the doctored video (the unnatural eye movements, the left spectacle lens appearing to blend into the face, the throat appearing to oddly puff up, and the lengthening of the chin) are observable in Modi’s visuals in the source video.

It appears that short clips of the crowds and the dignitaries were lifted from the source video and used as is in the doctored video, but that has not been the case with Modi’s visuals. In addition to the body language and hand gestures not being identical in the two videos, the visual anomalies highlighted above also point to likely digital manipulation in his visuals used in the doctored video.

The narrative being peddled through this video is similar to that of some other videos debunked by the DAU recently, which also falsely linked a particular religion and ideology to the Indian Army.

To discern the extent of A.I. manipulation in the video we reviewed, we put it through A.I. detection tools.

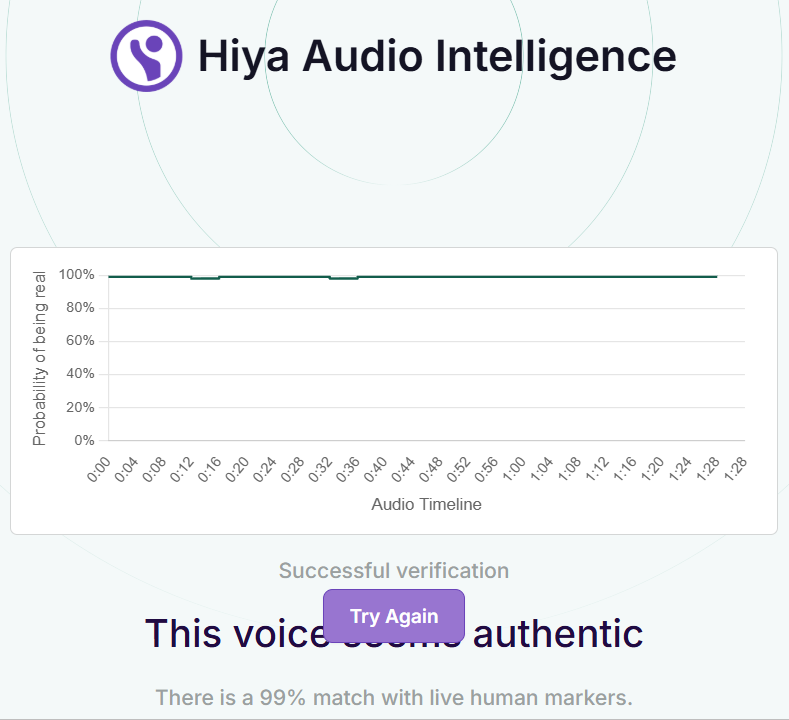

The voice tool of Hiya, a company that specialises in artificial intelligence solutions for voice safety, indicated that there is only a one percent probability that the audio track in the video was generated or modified using A.I.

Hive AI’s deepfake video detection tool did not highlight any markers of A.I. manipulation in the video track. Their audio detection tool indicated that the entire audio track is “not A.I.-generated”.

We ran the audio track through the advanced audio deepfake detection engine of Aurigin.ai, a Swiss deeptech company. The results indicated 96 percent confidence in the audio track being partially A.I.-generated.

We also put the audio track through the A.I. speech classifier of ElevenLabs, a company specialising in voice A.I. research and deployment. The results indicated that it is “very unlikely” that the audio track used in the video was generated using their platform. However, a further analysis by the team established that the audio track is synthetic or A.I.-generated.

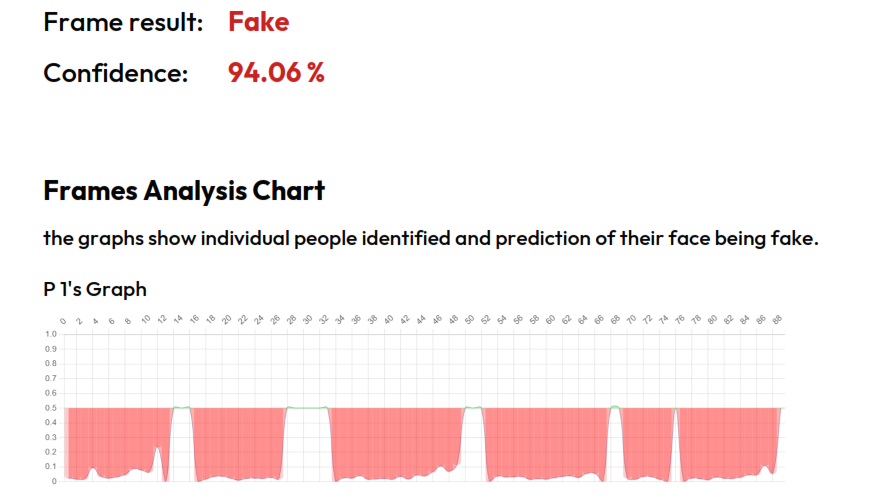

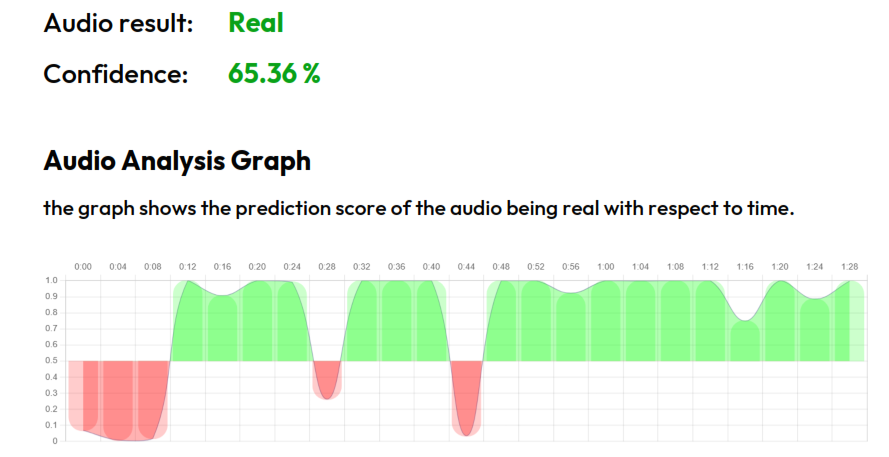

To get an analysis of the video, we reached out to Contrails AI, a Bangalore-based startup with its own A.I. tools for detection of audio and video spoofs.

The team ran the video through audio and video detection models. The results indicated manipulation in the video track with high confidence but assigned low confidence to manipulation in the audio track.

They stated that a lip-sync or lip-reanimation based A.I. technique has been used to synchronize the subject’s mouth area with the audio track. They added that visual artefacts are clearly visible near the lower portion of the subject’s beard.

They noted that their audio spoof detection model pointed to the audio being real, which may be a false prediction. They explained that the use of some of the latest models and techniques in the audio generation could have led to inaccurate results. However, they added that the audio sounds highly monotonous, indicating that it could be a studio recording or that it was generated using a voice cloning A.I. technique.

To get further expert analysis on the video, we escalated it to the Global Online Deepfake Detection System (GODDS), a detection system set up by Northwestern University’s Security & AI Lab (NSAIL). The video was analysed by two human analysts and run through 22 deepfake detection algorithms for video analysis.

Of the 22 predictive models, 14 gave a higher probability of the video being fake and the remaining eight gave a lower probability of the video being fake.

In their report, the team observed that the subject’s movements, including his mouth movements, appear unnaturally stiff and awkward throughout the video. They noted that the purported voice of the subject lacks the natural tonal and cadence variations that are characteristic of human voices, an observation we also made above. They added that the poor quality of the audio and the echo can make it difficult to ascertain other potential speech manipulations.

The team mentioned that the subject’s beard appears to flow below his chin in several frames. They pointed to specific timecodes to highlight this visual anomaly and identified this as “leftover visual artifacts” after he appeared to talk. They also highlighted other timecodes where the subject’s teeth appear to overlap with his lips and change shape.

The team also observed that the outline and lens of the subject’s glasses on the left eye appear blurred for the majority of the video. They drew attention to that eye seeming to droop lower than the subject’s right eye and changing shape throughout the video. As per their analysis, there seems to be a colour difference between the subject’s eyes with the left eye appearing to be slightly more grey or less opaque.

In conclusion, the team stated that the video is likely manipulated via artificial intelligence.

On the basis of our observations and expert analyses, we can conclude that Modi’s visuals were used with an A.I.-generated audio track to peddle a false narrative about him claiming that the Indian Army favours a particular faith and ideology.

(Written by Debopriya Bhattacharya and Debraj Sarkar, edited by Pamposh Raina.)

Kindly Note: The manipulated audio/video files that we receive on our tipline are not embedded in our assessment reports because we do not intend to contribute to their virality.

You can read the fact-checks related to this piece published by our partners:

Did PM Modi Warn Bangladesh, Talk About ‘Saffronising’ Indian Army?

This Video of PM Modi Reminding Bangladesh of 1971 War Is AI-Manipulated