The Deepfakes Analysis Unit (DAU) analysed a video that apparently shows India’s Road Transport and Highways Minister Nitin Gadkari, and Rajdeep Sardesai, a television journalist, promoting a financial investment platform supposedly backed by the government. On analysing the video, we noticed that the script of the audio track is identical to that used in another financial scam video that we recently debunked. After getting experts to weigh in we were able to conclude that the video was manipulated using A.I.-generated audio.

The two-minute-and-23-second video in English was escalated to the DAU by a fact-checking partner for analysis. The packaging of this video as a television interview and its duration are also similar to those of the manipulated video we pointed to above, which apparently featured Mr. Sardesai and India's Finance Minister Nirmala Sitharaman.

The video opens with a split screen in which two video streams play simultaneously. On the left, Sardesai can be seen in a medium close-up apparently talking to the camera from a studio setting. On the right, a string of short clips shows Indians on a busy street and at a construction site, a woman arranging flowers, and a cutout image of Mr. Gadkari against the map of India. Even as both video streams play simultaneously, the accompanying audio is heard only with the one apparently featuring Sardesai.

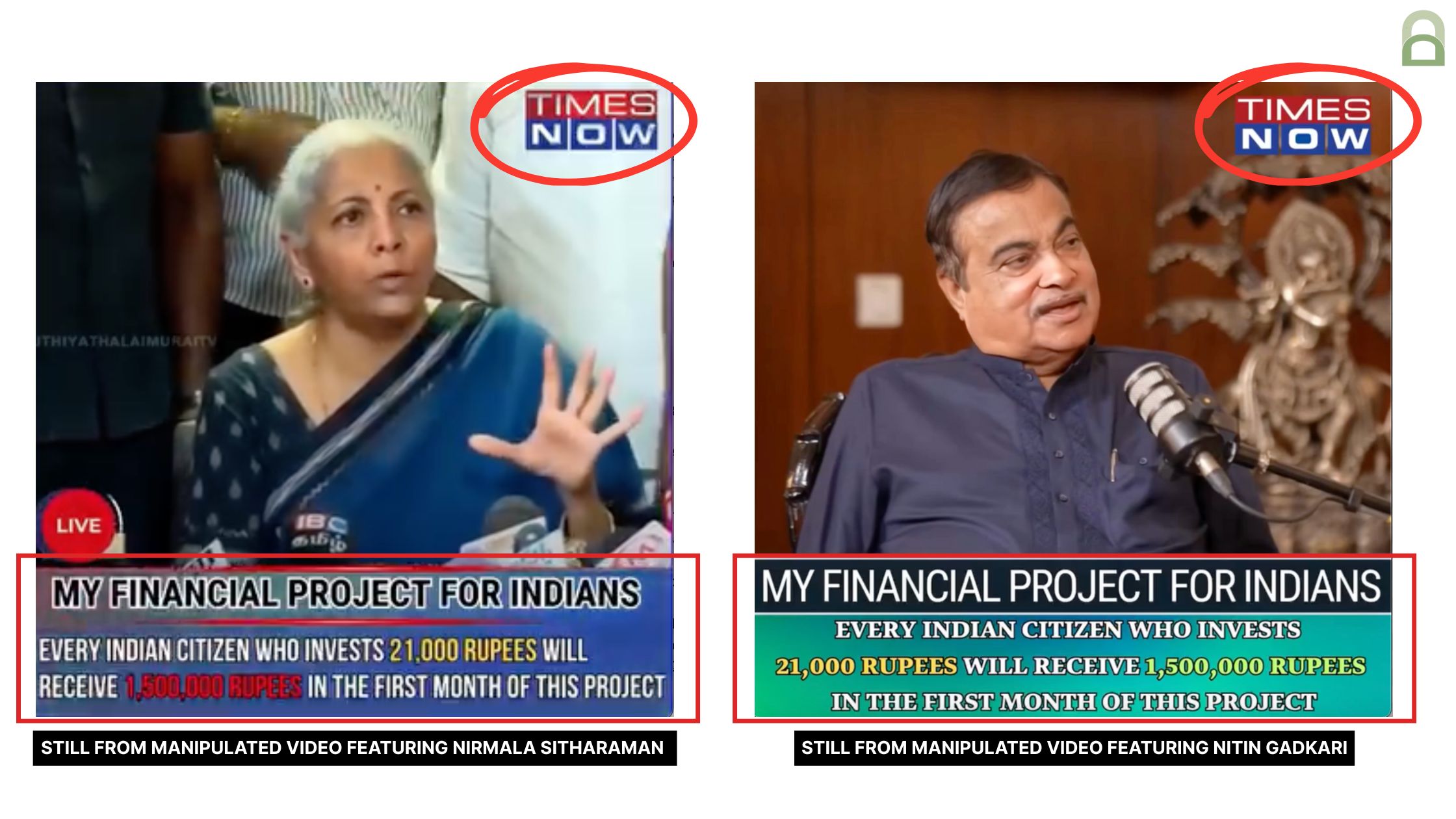

After the initial 12 seconds the video cuts to visuals of Gadkari followed by a segment with only Sardesai, making it appear that he’s asking Gadkari a question; this supposed sequence of question and answer repeats once more before the video ends. A logo resembling that of Times Now, an English-language news channel in India, is visible in the top right corner of the video frame in the segments featuring Gadkari.

Bold, static text graphics in English that read “My financial project for Indians” appear throughout at the bottom of the video frame. Below that, another set of text graphics reads: “Every Indian citizen who invests 21,000 rupees will receive 1,500,000 rupees in the first month of this project”. The numbers in the graphics are highlighted in yellow and green; everything else is in white.

The visuals featuring Gadkari show him seated in a chair, apparently speaking into a podcast microphone placed before him. His gaze never meets the camera and his hands move in an animated manner, making it seem that he is addressing someone seated across from him. The camera angle alternates between a tight medium close-up and a wider shot, altering his backdrop from a wood-panelled wall to a wooden door with glass panes and a metal statuette in some frames.

Sardesai’s backdrop comprises a view of a skyline. A laptop with a red sticker bearing the words “Parul University NAACA++” on its lid, and a partially visible glass of water are seen in front of Sardesai.

Sardesai’s and Gadkari’s lip movements appear puppet-like but are mostly consistent with their respective audio tracks, except for some parts in which there is a visible lag between the audio and video. Their upper sets of teeth are not visible in their respective visuals.

Sardesai’s lower set of teeth appears like a bright white patch in some frames. Gadkari’s lower set mostly appears like a dark brown patch; in some frames his lower lip seems to protrude, and in a few other frames a layer of skin oddly appears between his lips. In some instances his chin and cheekbone appear static even as his mouth is visibly moving.

The video has abrupt edits. In the segments featuring Gadkari, which are longer compared to Sardesai’s segments, there are several jump cuts and there is at least one instance where the audio gets cut in the middle of a sentence. In a segment featuring Sardesai his eyes appear shut for about three seconds even as he seems to be talking.

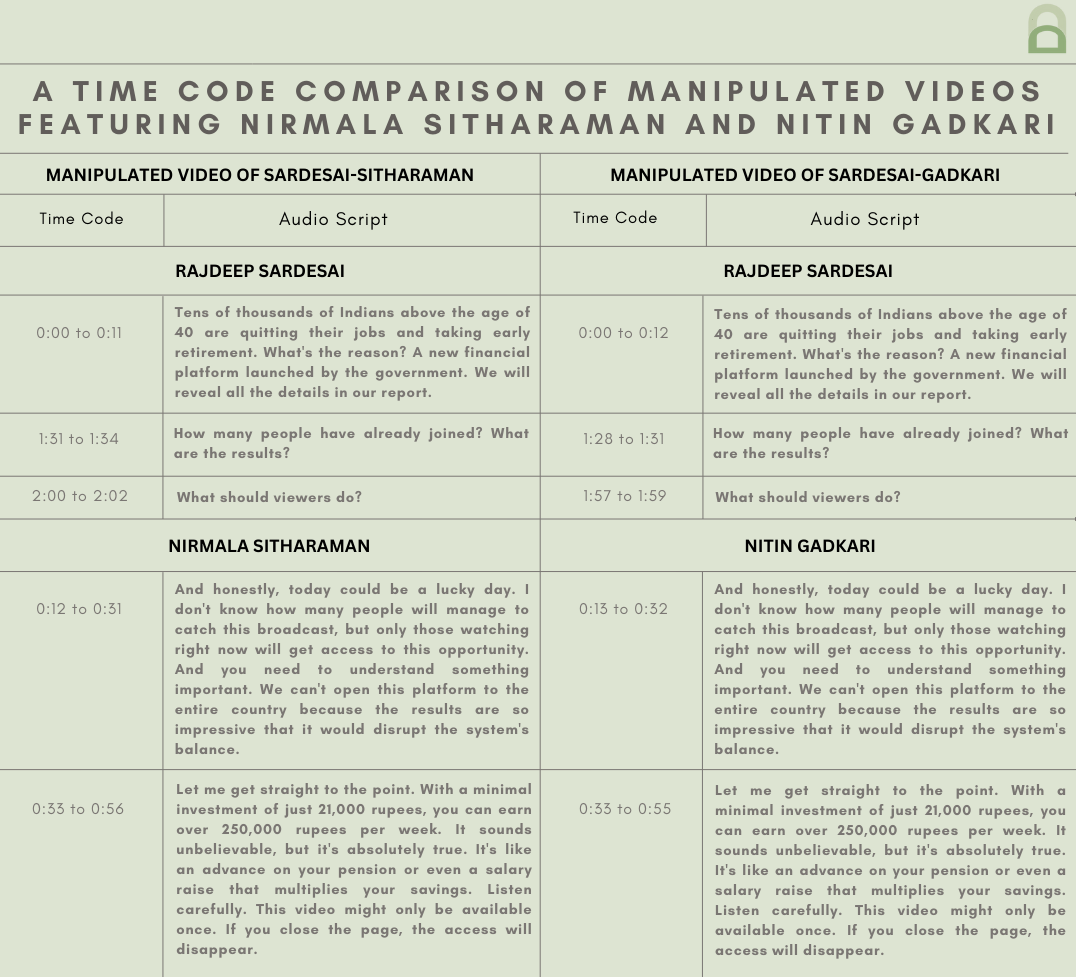

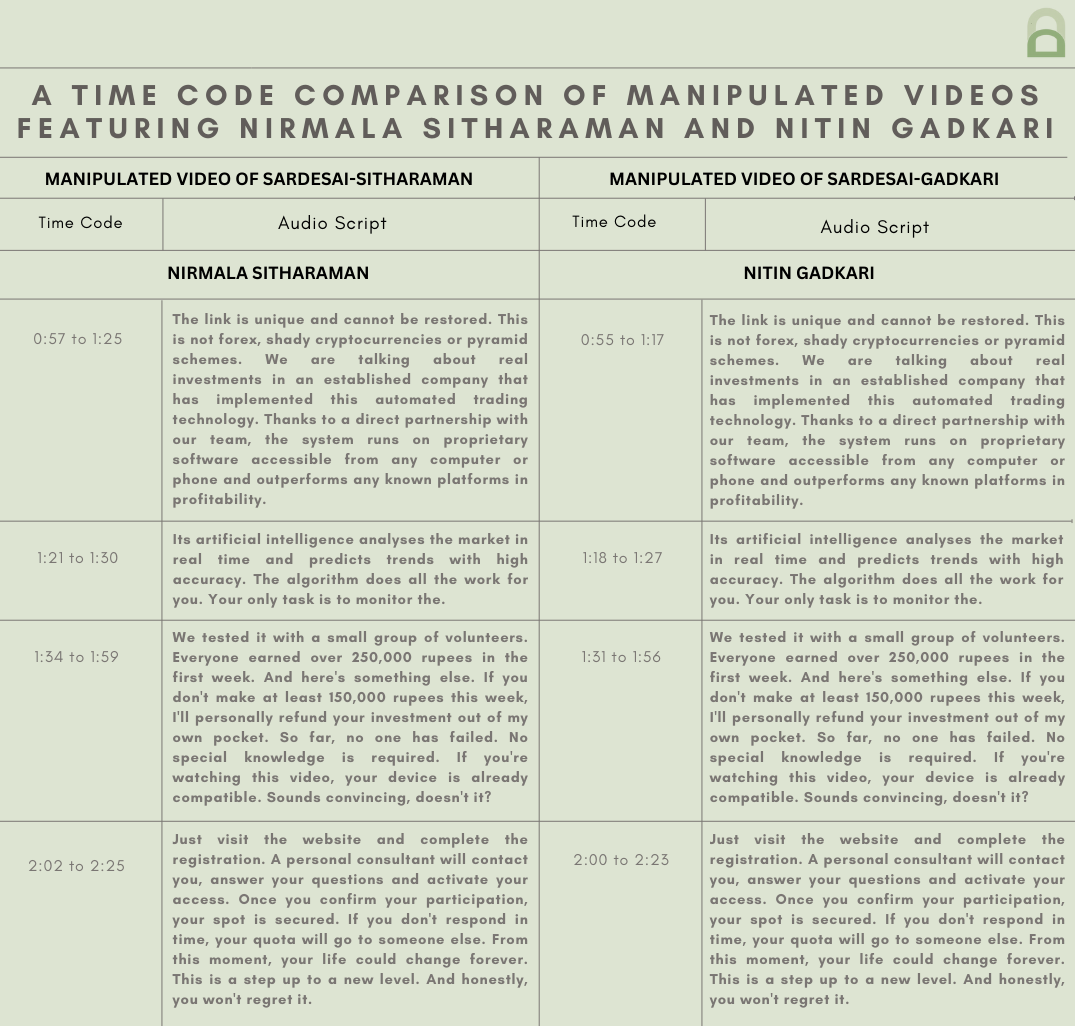

Shared below is a table that compares the audio script of this video with the one we recently debunked to underscore how the same script has been used verbatim in both videos. Yet again there is a mention of “21,000 rupees” in this video, a recurring pattern that we have observed in financial scam videos manipulated using A.I.

We have highlighted the copycat nature of this kind of content through our previous reports as well, such as here and here. We do not intend to give oxygen to bad actors behind such harmful and misleading content; our endeavour is to inform our readers.

On comparing the voices attributed to Sardesai and Gadkari with those heard in their recorded videos available online, we found similarities between their real and purported voices. In Sardesai’s case, the accent and diction also sound similar in both voices. However, Gadkari’s actual diction is different from the one captured in the video, while the accent in the video comes close to his actual accent. Static noise can be heard in the audio track with his visuals.

The overall delivery of the purported speakers sounds monotonous and is devoid of any intonation or natural pauses characteristic of human speech.

We undertook a reverse image search using screenshots from the video and traced Sardesai’s clip to this video, published on July 11, 2025 on the official YouTube channel of India Today, an English-language news channel in India. Gadkari’s clip was traced to this video, published on June 16, 2025 on the official YouTube channel of AIM Network, which focuses on A.I.

The clothing and body language of Sardesai and Gadkari in the video we reviewed and their original videos are identical. However, their respective backdrops are not an exact match as the manipulated video has used more zoomed-in frames from the source videos, cropping out portions of the background as well as the foreground.

The interviewer’s microphone in the foreground and a blurred yellow board placed next to the metal statuette in the background are part of Gadkari’s original video but not the doctored video. A glass of water and a mug behind it bearing the India Today logo are placed before Sardesai in the original video. However, in the doctored video the glass is cropped vertically so that only half of it is visible; the mug and the extended frame of the skyline in the background are absent.

Gadkari and Sardesai speak in Hindi and English, respectively, in their source videos and neither of them mentions any financial platform. There is no static noise in Gadkari’s source video.

Text graphics in English and Hindi appear momentarily at the beginning of Gadkari’s source video, while the logo of AIM Network is visible in the top-right corner of the video frame throughout. Neither of these is part of the doctored video. In Sardesai’s original video, India Today logos can be seen in the top-right and bottom-right corners of the video frame; neither can be spotted in the manipulated video.

Both the source videos are roughly 50 minutes long and have been shot in different frames. Sardesai’s video also includes varied footage and several other subjects; specific clips that feature only him have been lifted and stitched together with clips of Gadkari to create the manipulated video.

Since we were able to establish that the audio track was not authentic and given that the voices sounded like those of the subjects targeted, it was highly likely that the audio was A.I.-generated. We reached out to experts to seek their analysis.

We ran the audio track through the A.I. speech classifier of ElevenLabs, a company specialising in voice A.I. research and deployment. The results indicated that it was “very unlikely” that the audio track used in the video was generated using their platform.

When we reached out to ElevenLabs for a comment on the analysis, they told us that based on technical signals analysed by them they were able to confirm that the audio track in the video is synthetic or A.I.-generated. They added that they have taken action against the individuals who misused their tools to hold them accountable.

To get an expert analysis on the video, we escalated it to the Global Deepfake Detection System (GODDS), a detection system set up by Northwestern University’s Security & AI Lab (NSAIL). The video was analysed by two human analysts, run through 22 deepfake detection algorithms for video analysis, and seven deepfake detection algorithms for audio analysis.

Of the 22 predictive models, 10 gave a higher probability of the video being fake and the remaining 12 gave a lower probability of the video being fake. Six of the seven predictive models gave a high confidence score to the audio being fake and only one model gave a low confidence score to the audio being fake.
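The arithmetic behind these counts can be laid out in a short Python sketch. Only the four counts (22 and 10 for video, seven and six for audio) come from the GODDS results described above; the `Tally` class, its field names, and the simple-majority check are hypothetical illustrations and do not represent how GODDS combines its models.

```python
from dataclasses import dataclass


@dataclass
class Tally:
    """Hypothetical container for detector counts; not a GODDS data structure."""
    total: int    # number of predictive models run
    flagged: int  # models that gave a higher probability of "fake"

    @property
    def not_flagged(self) -> int:
        # Models that gave a lower probability of "fake"
        return self.total - self.flagged

    @property
    def share_flagged(self) -> float:
        # Fraction of models leaning towards "fake"
        return self.flagged / self.total

    @property
    def majority_flagged(self) -> bool:
        # Illustrative simple-majority check (an assumption, not the GODDS method)
        return self.flagged > self.not_flagged


# Counts as reported in the analysis above
video = Tally(total=22, flagged=10)
audio = Tally(total=7, flagged=6)

for name, tally in (("video", video), ("audio", audio)):
    print(
        f"{name}: {tally.flagged} of {tally.total} models flagged "
        f"({tally.share_flagged:.0%}), {tally.not_flagged} did not"
    )
```

Read this way, the video models were split almost evenly while the audio models leaned heavily towards "fake", which is consistent with the manipulation lying mainly in the audio track.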

In the report, they highlighted several visual discrepancies which point to the video being artificially manipulated. They noted that Sardesai’s eyelids appear to be closed at a specific timecode and yet his eyes seem to be subtly visible as an overlay on top of the eyelid area.

They also drew attention to another timecode where Gadkari’s lips seem to separate from his mouth while speaking, creating an unnatural appearance, which we too pointed to above. They further noted two instances where his mouth moves while the visuals of the rest of his face seem to transition, resembling what they refer to as a “visual overlay discrepancy”.

They also pointed to multiple jump cuts in the video track resulting in Gadkari’s position shifting abruptly without any interruption in the audio flow. They added that this could be due to post-production editing.

They corroborated our observations about both the voices lacking natural tone and cadence variations that are characteristic of human voices.

The team's overall findings pointed to the video being likely generated via artificial intelligence.

Based on our findings and analyses from experts, we can conclude that unrelated clips featuring Gadkari and Sardesai were used with an A.I.-generated audio track to fabricate yet another financial scam video with an all too familiar script.

(Written by Debopriya Bhattacharya and Debraj Sarkar, edited by Pamposh Raina.)

Kindly Note: The manipulated audio/video files that we receive on our tipline are not embedded in our assessment reports because we do not intend to contribute to their virality.

You can read below the fact-checks related to this piece published by our partners:

Fact Check: Viral Video Of Nitin Gadkari Endorsing Finance Platform Is A Deepfake