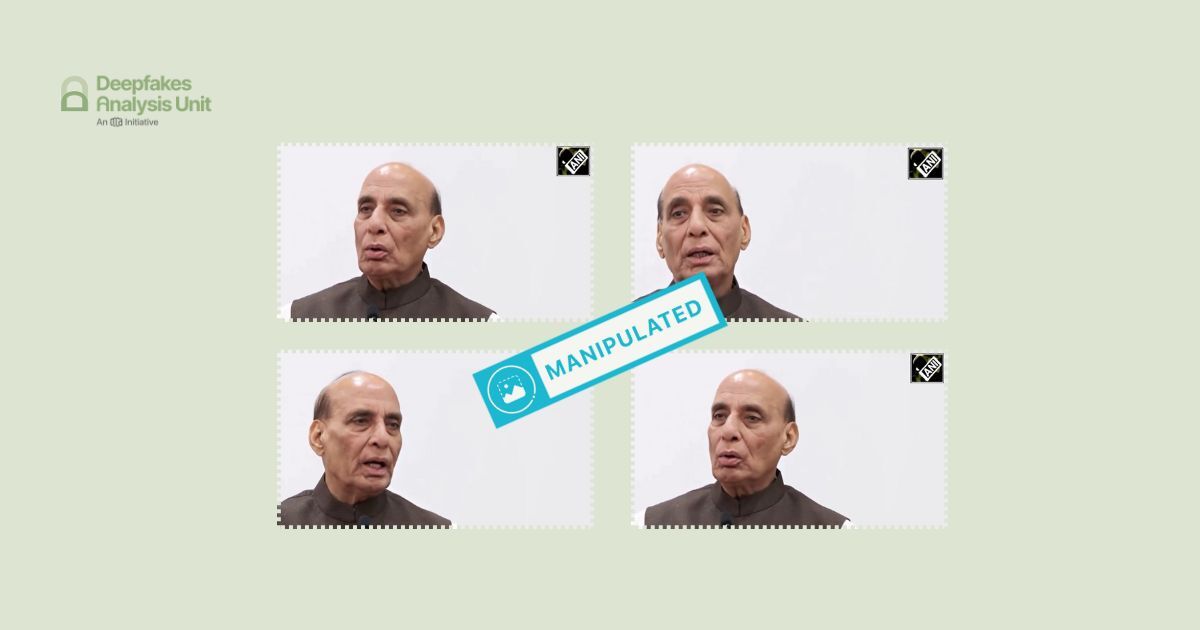

The Deepfakes Analysis Unit (DAU) analysed a video that purportedly shows Rajnath Singh, India’s Defence Minister, making a public statement about India having lost four Rafale aircraft to Pakistan. After putting the video through A.I. detection tools and getting our expert partners to weigh in, we were able to conclude that the video was fabricated using A.I.-generated audio.

The 28-second video in Hindi was discovered by the DAU on X, formerly Twitter, during social media monitoring. It was posted from several accounts on the platform and most of those had identical accompanying text.

One of those accounts posted the video on Sept. 25 with text in English that read: “Indian Defense Minister Rajnath Singh praised PM Modi for his remarkable vision in stopping a major Pakistani assault, crediting Trump’s help in brokering a truce. Singh highlighted that Modi’s leadership spared 20 cities and limited loss to four Rafale jets.”

We do not have any evidence to suggest whether the video originated from any of the accounts on X or elsewhere.

The fact-checking unit of the Press Information Bureau (PIB), which debunks misinformation related to the Indian government, recently posted a fact-check from its verified handle on X, debunking the video attributed to Singh.

In the video, Singh is captured in a medium close-up while standing and speaking into a microphone. He seems to look around as his hands move in an animated manner, giving the impression that he is addressing an audience.

The backdrop appears to be an off-white wall. An incomplete graphic in dark grey resembling the curve of a walking stick is visible in the bottom-left corner of the video frame. A logo resembling that of ANI, an Indian news agency, is visible in the top-right corner of the frame.

Singh’s lip movements appear to be in sync with the accompanying audio track; however, his mouth appears to move in a puppet-like manner. His teeth seem to disappear and reappear between frames, his right cheekbone appears static even as his mouth is visibly moving, and his eyes look unusually shiny and blink rather slowly.

On comparing the voice attributed to Singh with his recorded videos available online, we noticed a striking similarity in the voice, diction, and accent. However, the overall delivery in the video under review is monotonous, devoid of any change in intonation or pitch. A static noise could be heard in the audio track throughout.

We undertook a reverse image search using screenshots from the video and traced Singh’s clip to this video published on Sept. 22, 2025, on the official YouTube channel of ANI. Singh’s clothes and the backdrop in this video exactly match those in the video under review.

Singh’s body language changes throughout the video we traced, which is almost an hour long. Our first attempt at locating the clip identical to the one seen in the video we reviewed involved comparing the audio tracks of the two videos, as both are in Hindi. Only about five seconds matched in terms of the words heard; the remaining 23 seconds of the audio could not be heard anywhere in the video we located.

When we compared the video tracks over the matching audio segments, we observed that Singh’s body language and gestures were different in the two clips. His eye movements looked more natural in the video we traced, and his eyes did not look shiny as they did in the manipulated video.

There were no visible jump cuts or transitions in the manipulated video and it looked fairly seamless. So, either a clip had been lifted from another segment of the source video or it had been generated. Our partner GetReal Security, which was co-founded by Dr. Hany Farid and his team and specialises in digital forensics and A.I. detection, helped us identify the clip in the source video that matched the one in the doctored video.

The source video carries a logo of ANI in the top-right corner of the frame and a YouTube logo in the bottom-right corner. The latter is not part of the manipulated video while the former is visible in that video as well. The graphic resembling the top of a walking stick, seen in the doctored video, is part of the source video too.

In the original audio track, Singh’s voice sounds muffled and is accompanied by ambient sound and static noise, which is different from that heard in the manipulated video. The speech in the doctored video is more coherent, not muffled, and does not have any ambient sound.

The video being addressed through this report is similar to most videos that have been debunked by the DAU in the wake of recent military escalation between India and Pakistan. Such manipulated videos peddle a false narrative about India’s losses and are typically created by using original or generated video clips of top Indian military officials or ministers. The audio tracks in these videos are either fully A.I.-generated or in some cases original audio clips are spliced with A.I.-generated audio.

Shared below is a table that compares the English translation of the audio track from the doctored video with a segment of the audio track from the original video featuring Singh. We want to give our readers a sense of how similar the audio tracks are and how that similarity can be misleading. We, of course, do not intend to give any oxygen to the bad actors behind this content.

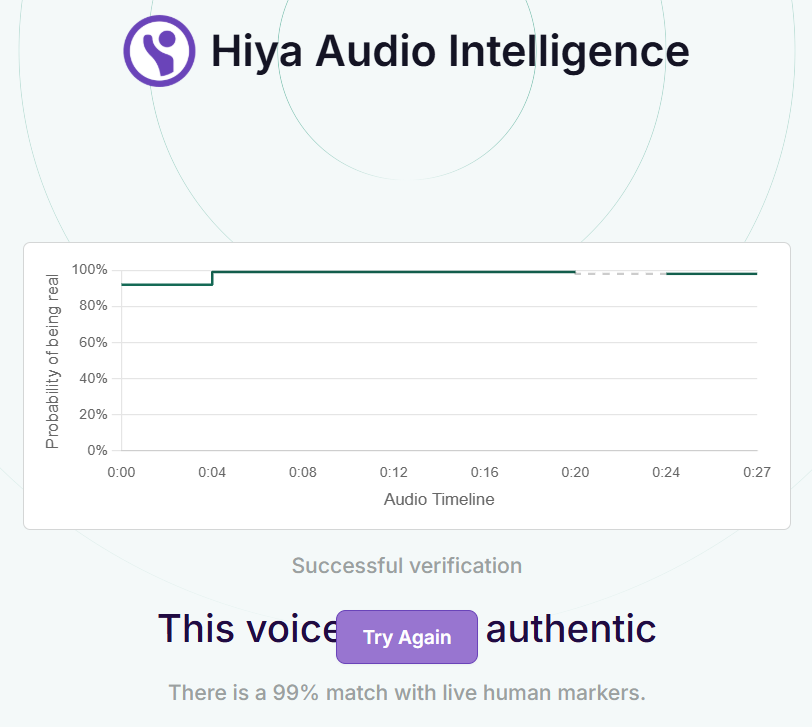

To discern the extent of A.I. manipulation in the video under review, we put it through A.I. detection tools.

The voice tool of Hiya, a company that specialises in artificial intelligence solutions for voice safety, indicated that there is only a one percent probability of the audio track in the video having been generated or modified using A.I.

Hive AI’s deepfake video detection tool highlighted markers of A.I. manipulation in two segments of the video track. However, their audio detection tool indicated that the entire audio track is “not A.I.-generated”.

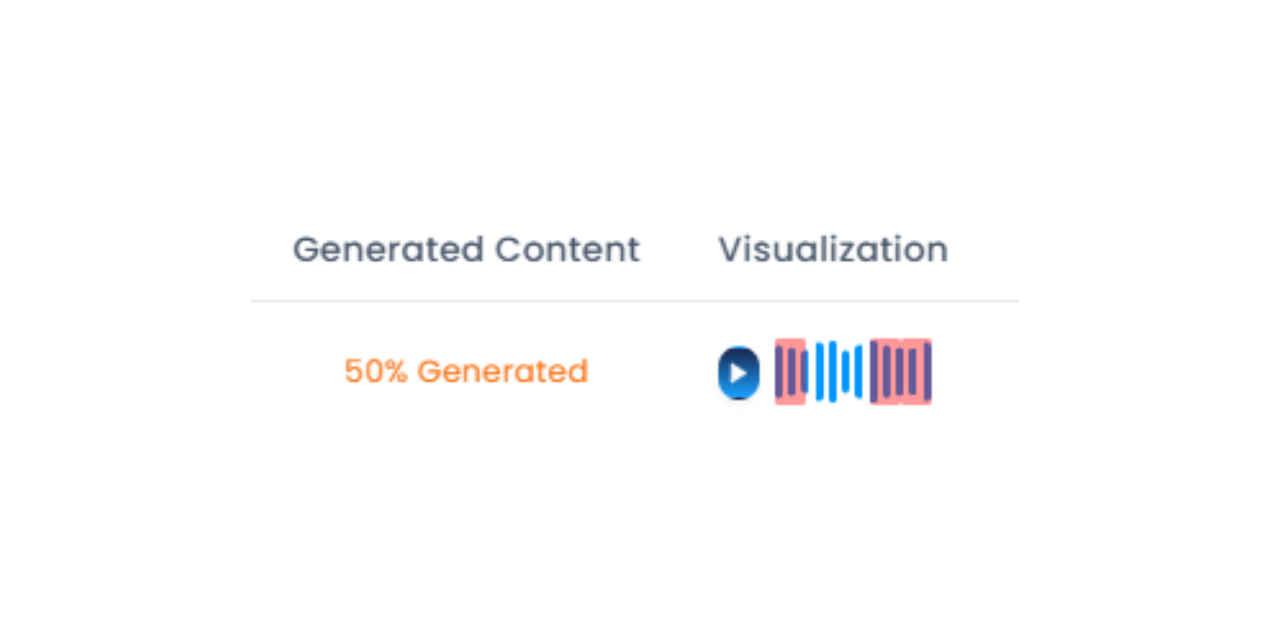

We ran the audio track through the advanced audio deepfake detection engine of Aurigin.ai, a Swiss deep-tech company. The result indicated that 50 percent of the audio track is A.I.-generated. The tool highlighted segments in the beginning and most of the second half of the audio track as generated.

We also ran the audio track through the A.I. speech classifier of ElevenLabs, a company specialising in voice A.I. research and deployment. The result indicated that there is a 61 percent probability that the audio track in the video was generated using their platform; the result was classified as “uncertain”.

When we reached out to ElevenLabs for a comment on the analysis, they told us that they conducted a thorough technical analysis but were unable to conclusively determine that the reported synthetic audio originated from their platform.

They noted that Singh is already included in their safeguard systems to help prevent unauthorised cloning or misuse of his voice, and they confirmed that if his voice had been cloned or otherwise misused on the ElevenLabs platform to create the audio files in question, those safeguards would have been activated.

To get expert analysis on the video, we escalated it to the Global Online Deepfake Detection System (GODDS), a detection system set up by Northwestern University’s Security & AI Lab (NSAIL). The video was analysed by two human analysts and run through 22 deepfake detection algorithms for video analysis and seven deepfake detection algorithms for audio analysis.

Of the 22 predictive models for video, 13 gave a higher probability of the video being fake and the remaining nine gave a lower probability of the video being fake. All seven audio models gave a high confidence score to the audio being fake.
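The split reported by GODDS can be expressed as simple shares. The snippet below is only an illustrative tally of the counts quoted above; it is not how GODDS combines its models, and the variable and function names are our own.

```python
# Counts as reported by GODDS in this report.
VIDEO_MODELS_TOTAL = 22
VIDEO_MODELS_LEANING_FAKE = 13
AUDIO_MODELS_TOTAL = 7
AUDIO_MODELS_LEANING_FAKE = 7


def share(part: int, total: int) -> float:
    """Return part/total as a percentage rounded to one decimal place."""
    return round(100 * part / total, 1)


video_share = share(VIDEO_MODELS_LEANING_FAKE, VIDEO_MODELS_TOTAL)
audio_share = share(AUDIO_MODELS_LEANING_FAKE, AUDIO_MODELS_TOTAL)

print(f"Video models leaning fake: {video_share}%")  # 13 of 22
print(f"Audio models leaning fake: {audio_share}%")  # 7 of 7
```

Read this way, the video models were divided while the audio models were unanimous, which is consistent with the audio track being the stronger signal of manipulation.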

In their report, the team corroborated our observation about the subject’s voice lacking the natural tonal and cadence variations that are characteristic of human voices. They highlighted several timecodes throughout the video that seemingly show the subject missing previously visible teeth, or the teeth disappearing momentarily before reappearing; these are observations that we also noted above.

They also identified a specific timecode where the subject’s lip colour abruptly changes to a noticeably lighter shade, and highlighted how at a particular point in the video the subject’s teeth seem disconnected from the lower jaw, creating an unnatural appearance. In conclusion, the team stated that the video is likely generated via artificial intelligence.

The team at GetReal Security also shared their expert analysis on the video. They noted that their audio models detected signs of synthesis in the audio track.

They added that the lips of the subject move faster than expected for the syllables that are heard. However, they clarified that they did not detect any anomalies in the facial analysis, so it is unlikely that the video was created by replacing the subject’s face using a tool.

As pointed out above, they identified the specific clip in the source video that matched the visuals in the doctored video. They pointed to the hand and head motions being a perfect match. They explained that an A.I.-driven avatar created from an image would not precisely follow these motions; it would instead generate its own interpretation of how the person should move, which would be discernible on looking at the source and manipulated videos side by side.

They shared an overlay of the doctored video and the matching clip to illustrate the differences in the mouth movements between the two.

They stated that the only significant differences are in the mouth region, indicating that this was the part of the original that was altered.

However, they pointed out that the YouTube video (which we link to above), traced as the source, may be of lower quality than the version used to create the doctored video. They further noted that the video displays a lot of interlacing artefacts and edge softening, indicating that it may have been taken from an analog source.

In the visual above, a frame-by-frame comparison of the clips highlights how the two clips are not an exact match. The darker portions indicate where the frames are similar and the lighter areas mark the pixels that differ. The comparison also illustrates how Singh’s mouth area is the region most affected by the tools used by the creators of the doctored video.

The GetReal Security team also noted that if the version that we identified as the source video had been used by the video creators, then the edge outline and motion or interlacing artefacts, as seen in the visual above, would be much less prominent in the difference between the frames.

They added that if the same comparison had been made using the original digital source then the entire screen would be near dark except for the manipulated area of the mouth.
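The idea behind such a difference visual can be sketched in a few lines. This is a minimal illustration on synthetic grayscale frames, not GetReal Security's method; the function names, the 6x6 frame size, and the threshold of 30 are assumptions made for the example.

```python
Frame = list[list[int]]  # grayscale pixels, 0 (black) to 255 (white)


def difference_map(a: Frame, b: Frame) -> Frame:
    """Absolute per-pixel difference: 0 (dark) wherever the frames agree."""
    return [[abs(pa - pb) for pa, pb in zip(row_a, row_b)]
            for row_a, row_b in zip(a, b)]


def changed_box(diff: Frame, threshold: int = 30):
    """Bounding box (top, left, bottom, right) of pixels above threshold, or None."""
    hits = [(r, c) for r, row in enumerate(diff)
            for c, value in enumerate(row) if value > threshold]
    if not hits:
        return None
    rows = [r for r, _ in hits]
    cols = [c for _, c in hits]
    return min(rows), min(cols), max(rows), max(cols)


# Synthetic frames: identical except for a 2x2 patch standing in for the mouth.
source = [[100] * 6 for _ in range(6)]
doctored = [row[:] for row in source]
for r in (3, 4):
    for c in (2, 3):
        doctored[r][c] = 180

diff = difference_map(source, doctored)
box = changed_box(diff)
print(box)  # region where the two frames differ
```

With a clean digital source, everything outside the altered patch is zero in the difference map, which corresponds to the "near dark" screen described above. Compression, interlacing artefacts, or edge softening in a lower-quality source would add small non-zero values elsewhere, which is why a threshold is applied before locating the changed region.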

On the basis of our findings and expert analyses, we can conclude that the video was fabricated by combining an A.I.-generated audio clip with Singh’s original video. A false narrative was peddled about the Indian Air Force having lost fighter aircraft in the recent India-Pakistan conflict.

(Written by Debopriya Bhattacharya and Debraj Sarkar, edited by Pamposh Raina.)

Kindly Note: The manipulated audio/video files that we receive on our tipline are not embedded in our assessment reports because we do not intend to contribute to their virality.

You can read the fact-checks related to this piece published by our partners: