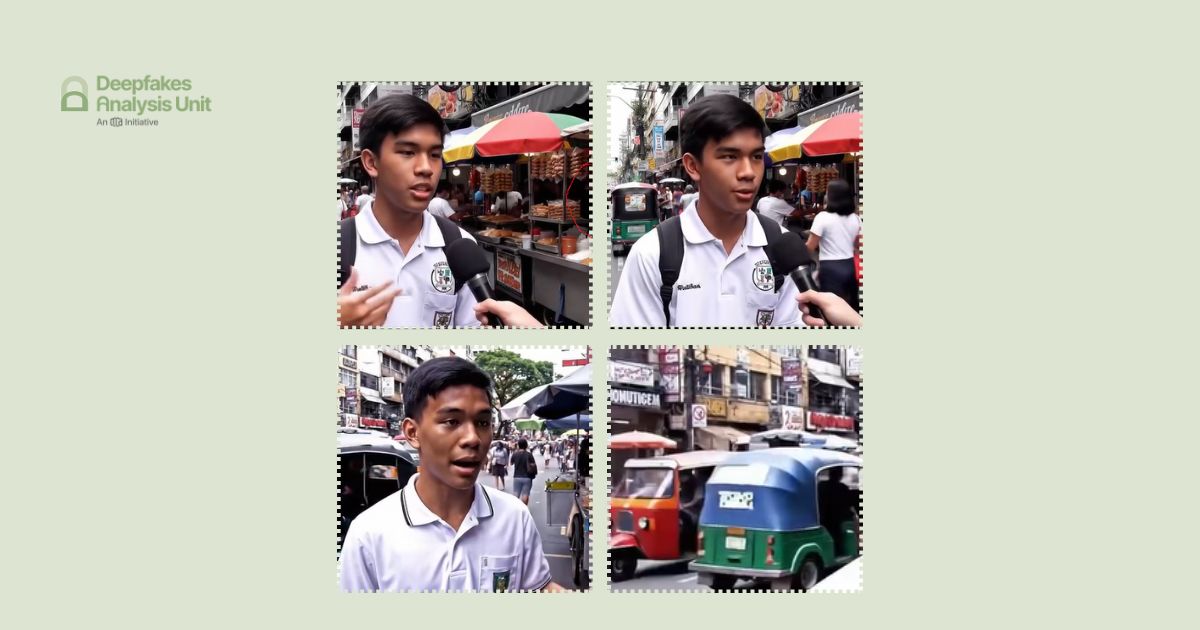

Picture this: a 29-second video featuring three separate street interviews of young boys in distinct crisp white T-shirts bearing crests typical of school uniforms. In a mix of English and Tagalog, a language widely spoken in the Philippines, they express their opinions about the impeachment of Sara Duterte, Vice President of the Philippines.

They sound upset.

There is a flurry of activity around these students, with traffic slowly moving behind them, food carts with hanging lights lined up on the side, and passers-by in motion.

A closer look at the video suggests that the setting of the interviews could be a commercial hub, perhaps somewhere in Asia. Old low-rise buildings stand adjacent to each other, with overhead wires clustered on lamp posts and billboards jutting out, displaying English letters in mostly unintelligible arrangements. Store boards are equally cryptic.

As the last boy stops talking, dramatic music kicks in and a black screen appears with the words: “You want justice but your justice is selective,” marking the end of the video.

If you have stayed with us so far, thank you! If you are wondering why we are telling you about a seemingly innocuous video, here is a hint: read the headline.

On a busy afternoon when VERA Files, a fact-checking organisation in the Philippines, reached out to us to seek an analysis of the authenticity of the video, we were perplexed at first: the video looked very real. The detailing also seemed convincing. So what could possibly have been wrong? The devil does indeed lie in the details!

For the eyes only

When we started scrutinising each frame by slowing down the video and zooming in to check for anomalies, we spotted quite a few.

The eye movement, especially the blinking of the student featured at the end, looks unnatural. The lower set of teeth of the other two boys seems to change shape and even lose definition in some frames, resembling a white patch. For one of them, the teeth seem to bend along the curve of the lower lip.

And then there is that all-too-shiny quality to the visuals. Everything looks just a little too polished, as if the video had been through some sort of digital spa.

We spotted the word “Veo” emblazoned on the lower right corner of the video frame, which was a big clue. The font and colour of that word exactly matched the logo of Veo, Google’s generative A.I. model, which creates high-quality and hyperrealistic videos from text prompts.

As per a post published on the Keyword, Google’s official blog, on May 30, 2025, all the A.I.-generated content originating from their tools is supposed to be watermarked, except for a certain category of videos generated using Flow, an A.I. tool for filmmakers.

We cannot say for certain that the video in question was generated using Veo. However, it was escalated to us after May 30, and it is unlikely that a video generated using a different tool would carry the exact same logo, reproduced with such precision.

Our eyes noticed other details as we focussed on the settings in the video. Oddly enough, there were no numbers imprinted on the number plates of the vehicles.

The forearm and hands of a food cart vendor become visibly disfigured as they appear to be in motion. Another vendor seems to lose his arm as a man brushes past him. Yet another rather strange occurrence that caught our attention was that, at one point, as two passers-by cross each other they somehow end up sharing an arm. You read that right!

But don’t just take our word for it; see the GIFs below.

We do not have in-house expertise in Tagalog, so it was difficult for us to identify any obvious inconsistencies in the speech of the boys, especially when it came to the diction, which can sometimes be a giveaway.

Let the tools do their thing

We were convinced that the video had elements of A.I., given the string of unusual visual aspects that we spotted. But we were particularly curious about the audio. The next logical step was to run the video through A.I. detection tools, which, of course, is an integral part of our verification workflow.

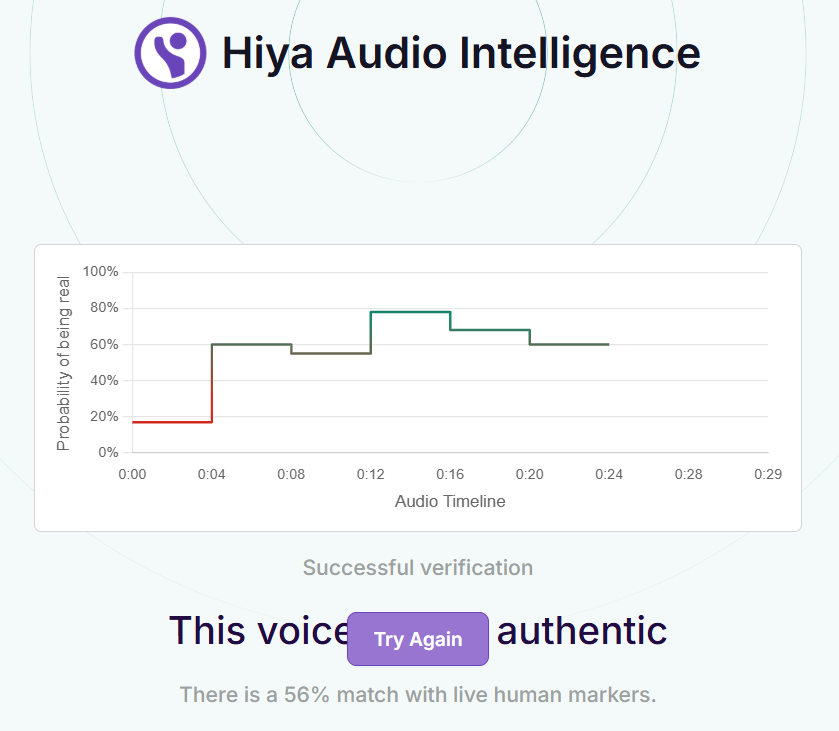

We started with the audio analysis. The voice tool of Hiya, a company that specialises in A.I. solutions for voice safety, gave a 44 percent probability of the audio being fake.

The A.I. speech classifier of ElevenLabs, a company specialising in voice A.I. research and deployment, indicated that it was “very unlikely” that the audio track was created using their platform. That does not rule out the possibility of the audio being synthetic. It could have been made using a different tool or platform.

Hive AI’s audio detection tool highlighted about 9 seconds of the audio track as A.I.-generated; according to its results, the remaining 20 seconds did not have any A.I. elements.

The audio analysis from the tools was not conclusive, but it did tell us that there was a good chance that the audio track had some synthetic elements.
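To put the Hive audio result in proportion, the arithmetic can be sketched in a few lines of Python. This is only an illustration using the durations reported above (about 9 flagged seconds in a 29-second clip). The function and variable names are our own and are not part of any detection tool.

```python
# Illustrative only: durations are the approximate figures reported in the article;
# the names below are hypothetical and do not come from any tool's API.
CLIP_SECONDS = 29          # total length of the video
FLAGGED_SECONDS = 9        # audio highlighted as A.I.-generated by the tool


def flagged_share(flagged_seconds: float, total_seconds: float) -> float:
    """Return the fraction of the clip that was flagged as A.I.-generated."""
    if total_seconds <= 0:
        raise ValueError("total_seconds must be positive")
    return flagged_seconds / total_seconds


share = flagged_share(FLAGGED_SECONDS, CLIP_SECONDS)
print(f"Flagged share of the audio track: {share:.0%}")
```

Roughly a third of the track was flagged, which is consistent with treating the audio results as suggestive rather than decisive.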

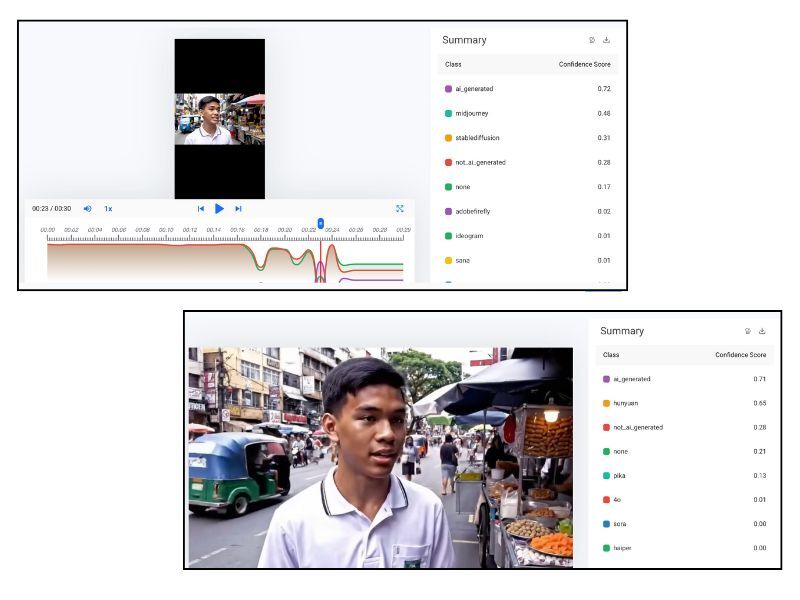

We also ran the visuals through Hive’s deepfake video detection tool but, strangely, it found no marker of A.I. anywhere in the video track. To investigate the visuals further, we used another classifier from Hive and ran keyframes, screenshots, and the entire 29-second video through it.

This time the results gave a high confidence score of 0.72 out of 1, indicating that at least one segment of the video is A.I.-generated. The tool flagged that the visuals were likely created using Midjourney, Stable Diffusion, Pika, and Hunyuan, which are popular A.I. image and video generators.

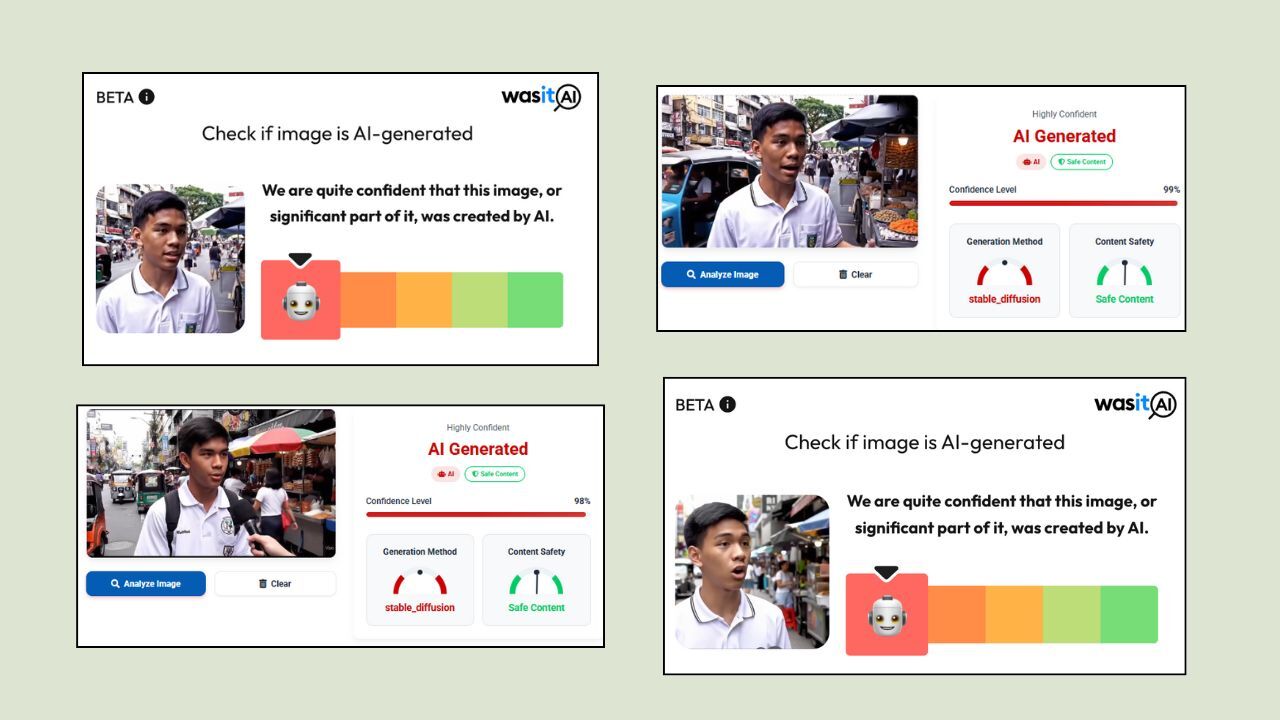

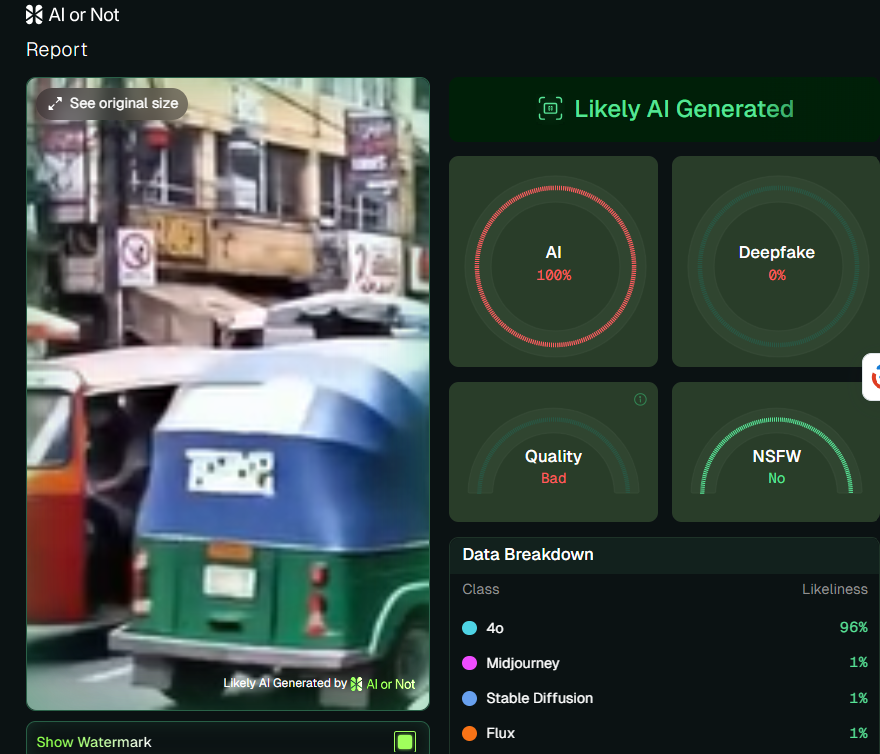

Our investigation did not stop there. We also ran the still images from the video through other free image detection tools, including WasitAI, IsitAI, and AIorNot. All of them pointed to the use of A.I. in the frames that showed the students’ faces.

Detection tools are helpful—they scan for telltale signs and give us probability scores—but they don’t always explain what is actually wrong with a piece of content. That is why we dig deeper. Our approach is pretty simple: watch the video like a detective, and look for the little things that don’t add up.

Bottom line: you cannot beat a careful human eye.

Au revoir!

If you are an IFCN signatory and would like us to verify harmful or misleading audio and video content that you suspect is A.I.-generated or manipulated using A.I., send it our way for analysis using this form.

(Written by Debraj Sarkar and Debopriya Bhattacharya, edited by Pamposh Raina.)

Kindly Note: The manipulated audio/video files escalated to us for analysis are not embedded here because we do not intend to contribute to their virality.