The Deepfakes Analysis Unit (DAU) analysed a video that apparently shows Lt. Gen. Rahul R. Singh, India’s deputy army chief, supposedly making a statement about India losing two S-400 missile systems to Pakistan. After putting the video through A.I. detection tools and getting our expert partners to weigh in, we were able to conclude that an A.I.-generated audio clip was spliced with real audio to manipulate the video.

The DAU discovered the one-minute-and-29-second video, in English, on more than one account on X, formerly Twitter, through social media monitoring. The accompanying text with the video, also in English, posted by one such account on July 4 read: "Another confession! Indian Deputy Army Chief Lt Gen Rahul Singh admits that India was currently in negotiations with Russia to get two more S-400 systems as two S-400 were lost on 10 May when Pakistan launched Marka e Haq in response to India’s Operation Sindoor."

We do not have any evidence to suggest whether the video originated from any of these accounts on X or elsewhere.

The fact-checking unit of the Press Information Bureau, which debunks misinformation related to the Indian government, recently posted a fact-check from its verified handle on X debunking the video attributed to General Singh. The S-400 air defence missile systems were deployed by India during the military escalation with Pakistan in May 2025. According to news reports, Russia will deliver additional S-400 systems to India by 2026 as part of a deal under which India already has three of those systems in use.

In the video, the general is seen standing in a medium close-up shot, giving the impression that he is addressing a gathering. Even as his hands move in an animated manner, his face and eyes seem oddly focussed toward his right. His head movements and upper body language seem especially stiff.

Part of the backdrop comprises a greenish wall, while the other half carries a tiled arrangement with a logo resembling that of FICCI, the Federation of Indian Chambers of Commerce and Industry, India’s leading business body. A logo resembling that of DNA, an Indian digital news publication, is visible in the top right corner of the video frame.

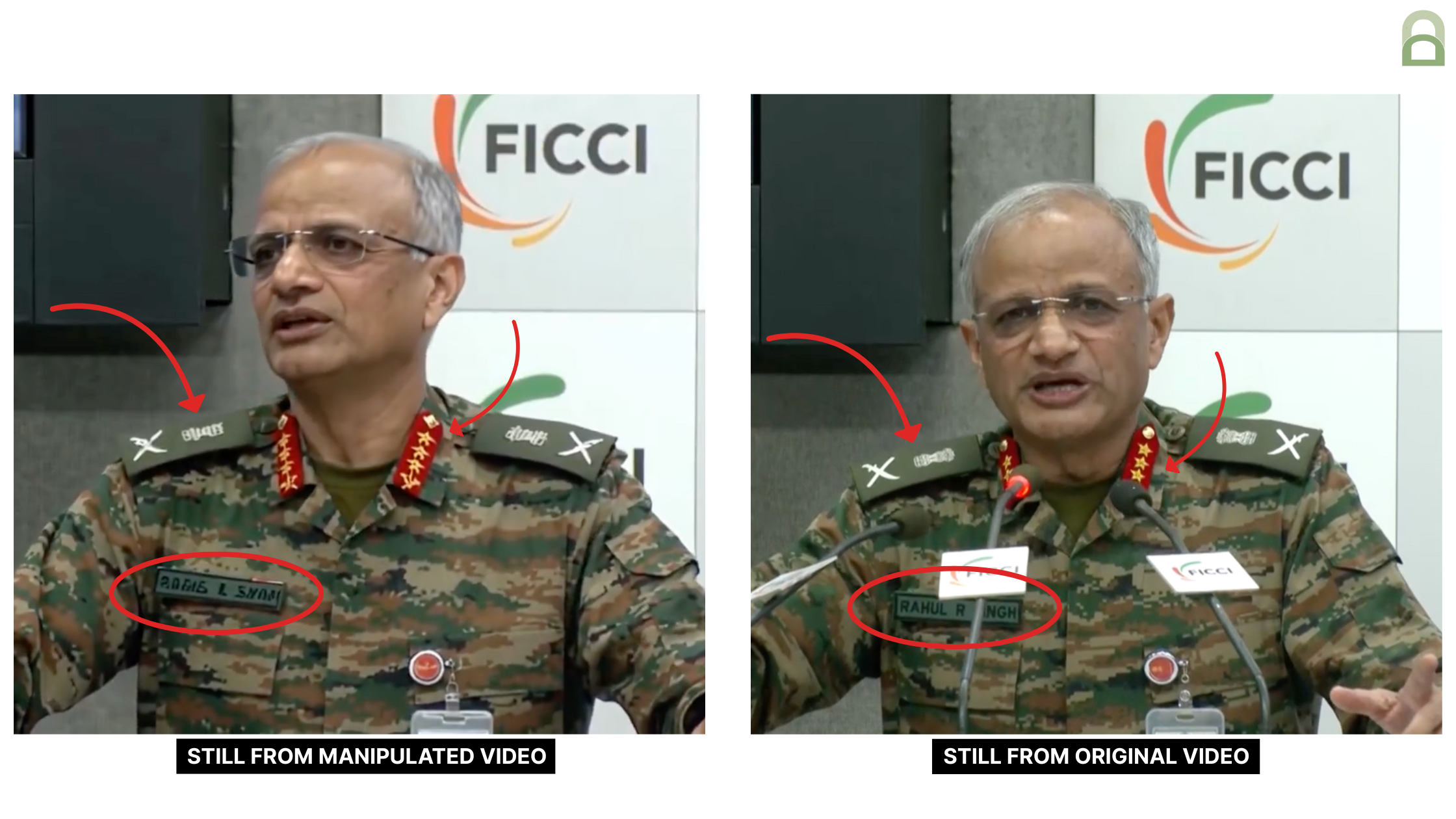

The general’s lip movements are not perfectly in sync with the audio attributed to him. At several instances there seems to be a slight lag between the audio and the video. In some frames his upper set of teeth appears to blend unnaturally into his upper lip as he seems to speak. Garbled characters are visible on his uniform in place of his name tag, and unidentifiable military regalia, which changes shape, is visible on his epaulettes instead of the Indian national emblem; all of these appear to be signs of manipulation.

On comparing the voice attributed to the general with his recorded addresses available online, the striking similarity in voice, accent, and diction is hard to miss. However, there is a 14-second audio segment in the video which has an echo and a peculiar noise that somewhat resembles human breathing; neither of these sounds is heard elsewhere in the video.

We undertook a reverse image search using screenshots from the video and traced the general’s clip to a video published on July 4, 2025, on the official YouTube channel of DNA India News.

His backdrop and clothing are identical in the video under review and the one we traced, except for the name tag and the epaulettes on his uniform; in the video we traced, both are clearly visible, with the Indian Army insignia.

Microphones visible in the foreground and the text graphics detailing the general’s name and the venue from where he spoke appear in the source video but not in the doctored video. Two different DNA logos are visible in the top right and bottom right corners of the frame in the source video, while only one logo was spotted in the doctored video.

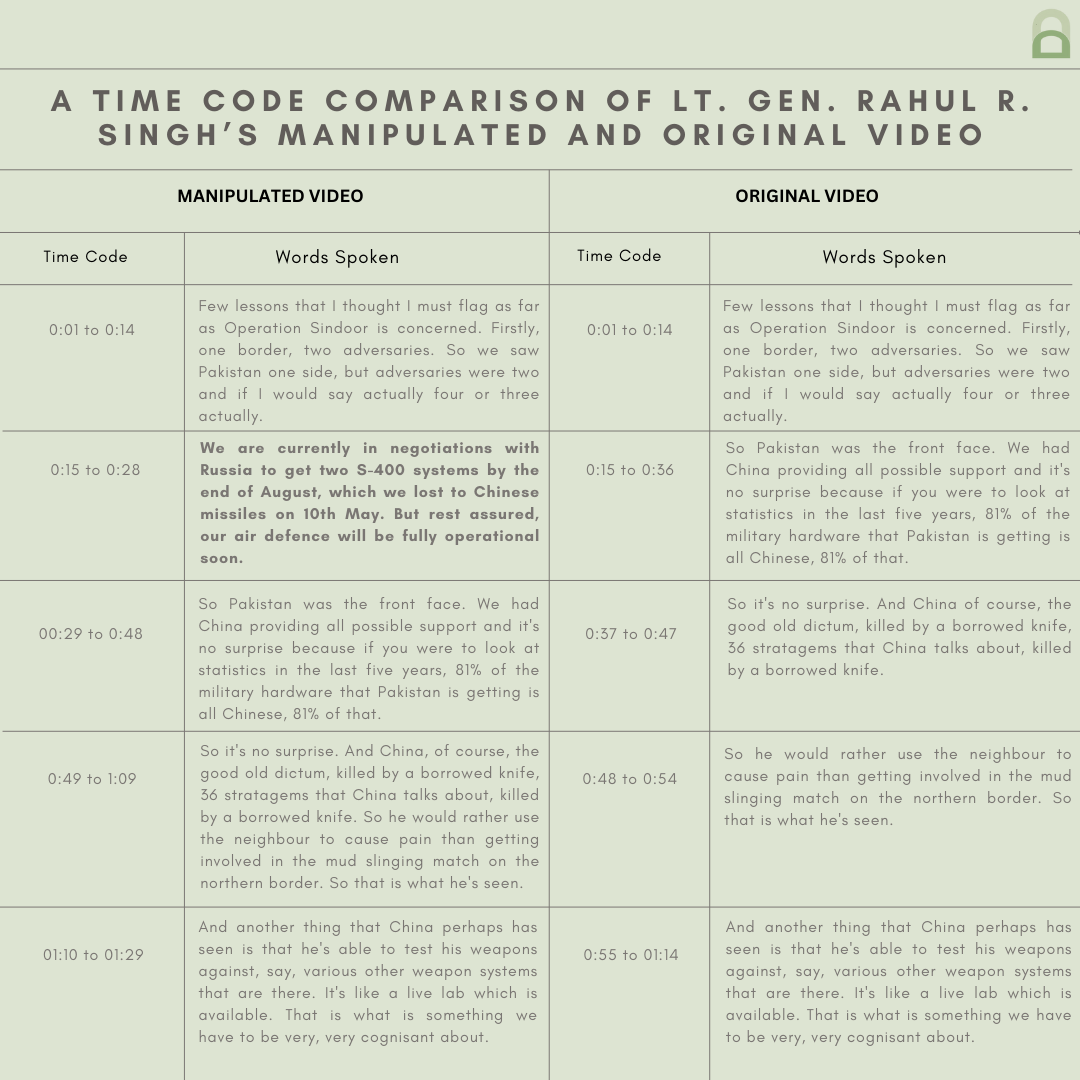

Most of the audio heard in the doctored video has been taken from the source video, which is also in English. The 14-second segment with the peculiar sound mentioned above is the only portion that does not appear anywhere in the source video.

When we compared the video track of the manipulated video with the footage over the same audio segments in the source video, we observed that the general’s body language and gestures were completely different. We also noticed that the video track in the doctored video has no visible jump cuts or transitions and looks fairly seamless.

These observations suggest that although the bulk of the audio track is the same in both videos, the manipulated video was most likely not created by lifting the video segments that accompany those portions of audio in the source video. Instead, it appears that a different clip from the source video was lifted and synced with an audio track produced by stitching together portions of the original audio and a short synthetic audio segment.

We recently debunked a similar video which had also been manipulated using an audio track that combined a short A.I.-generated segment with the original audio of Dr. Subrahmanyam Jaishankar, India’s External Affairs Minister. That video, too, peddled a fake narrative about India losing combat aircraft to Pakistan during the military escalation.

To give our readers a sense of how such false narratives are being spun, we have shared below a table that compares the audio tracks from the original video featuring the general with the manipulated one. By no means do we want to give any oxygen to the bad actors behind this misleading and harmful video.

To discern the extent of A.I. manipulation in the video under review, we put it through A.I. detection tools.

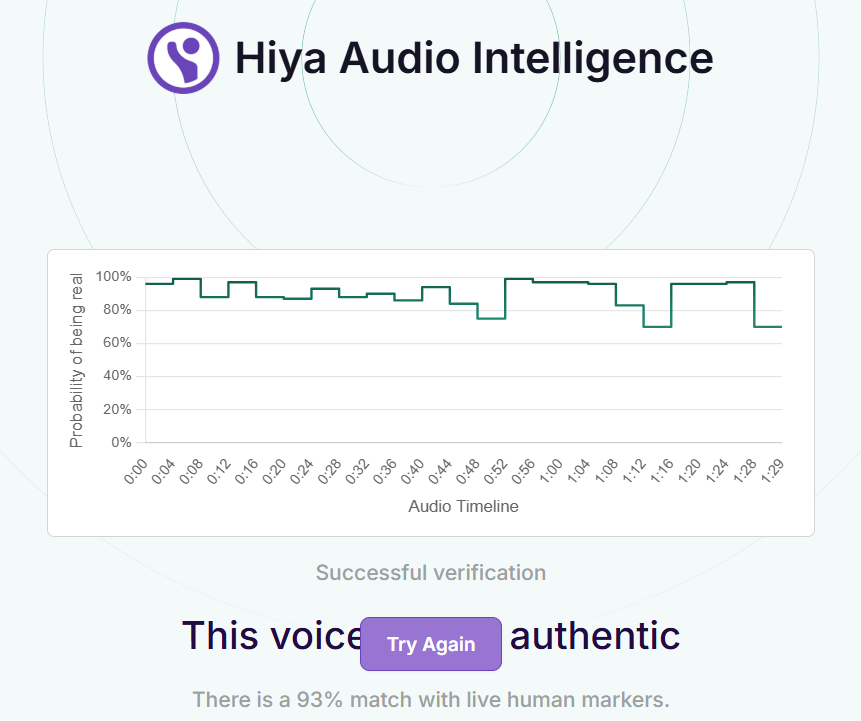

The voice tool of Hiya, a company that specialises in artificial intelligence solutions for voice safety, indicated a seven percent probability that the audio track in the video was generated or modified using A.I.

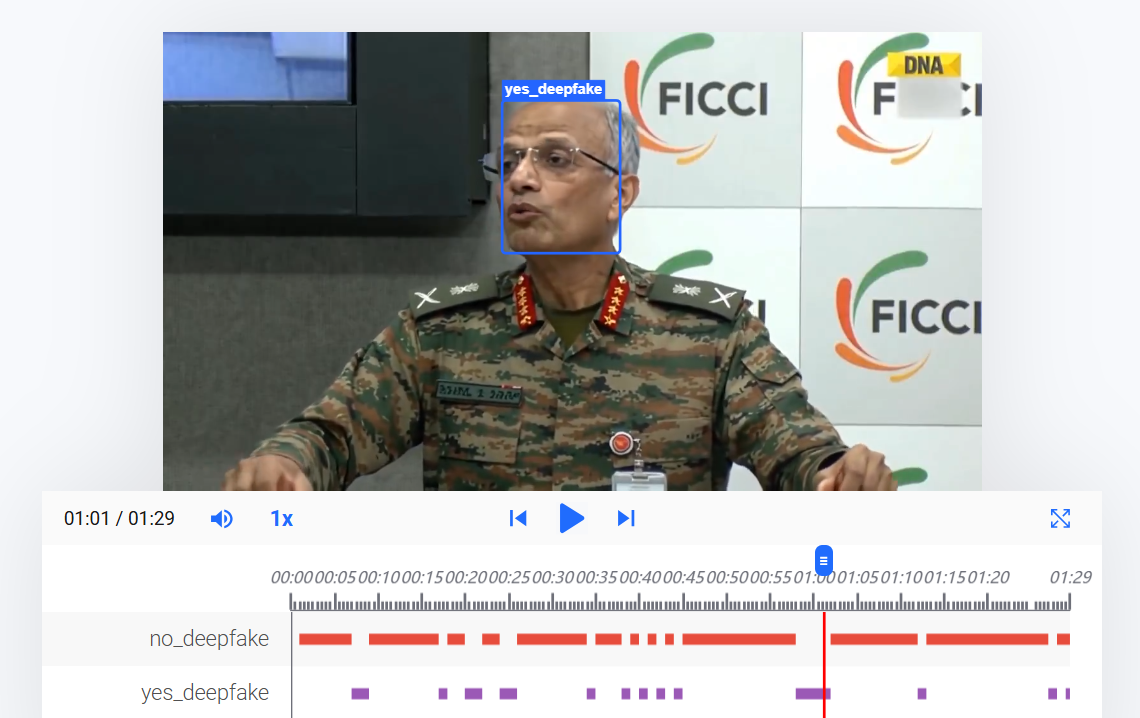

Hive AI’s deepfake video detection tool highlighted markers of A.I. manipulation in several segments of the video track. However, its audio detection tool did not indicate that any portion of the audio track was A.I.-generated.

We also ran the audio track through the A.I. speech classifier of ElevenLabs, a company specialising in voice A.I. research and deployment. The results indicated that it was “very unlikely” that the audio track used in the video was generated using their platform.

When we reached out to ElevenLabs for a comment on the analysis, they told us that they were unable to conclusively determine that the synthetic audio originated from their platform. They noted that the general is already included in their safeguard systems to help prevent unauthorised cloning or misuse of his voice, and that if his voice had been cloned or otherwise misused on the ElevenLabs platform to create the audio files in question, those safeguards would have been activated.

For further analysis of the video, we reached out to ConTrails AI, a Bangalore-based startup with its own A.I. tools for detecting audio and video spoofs.

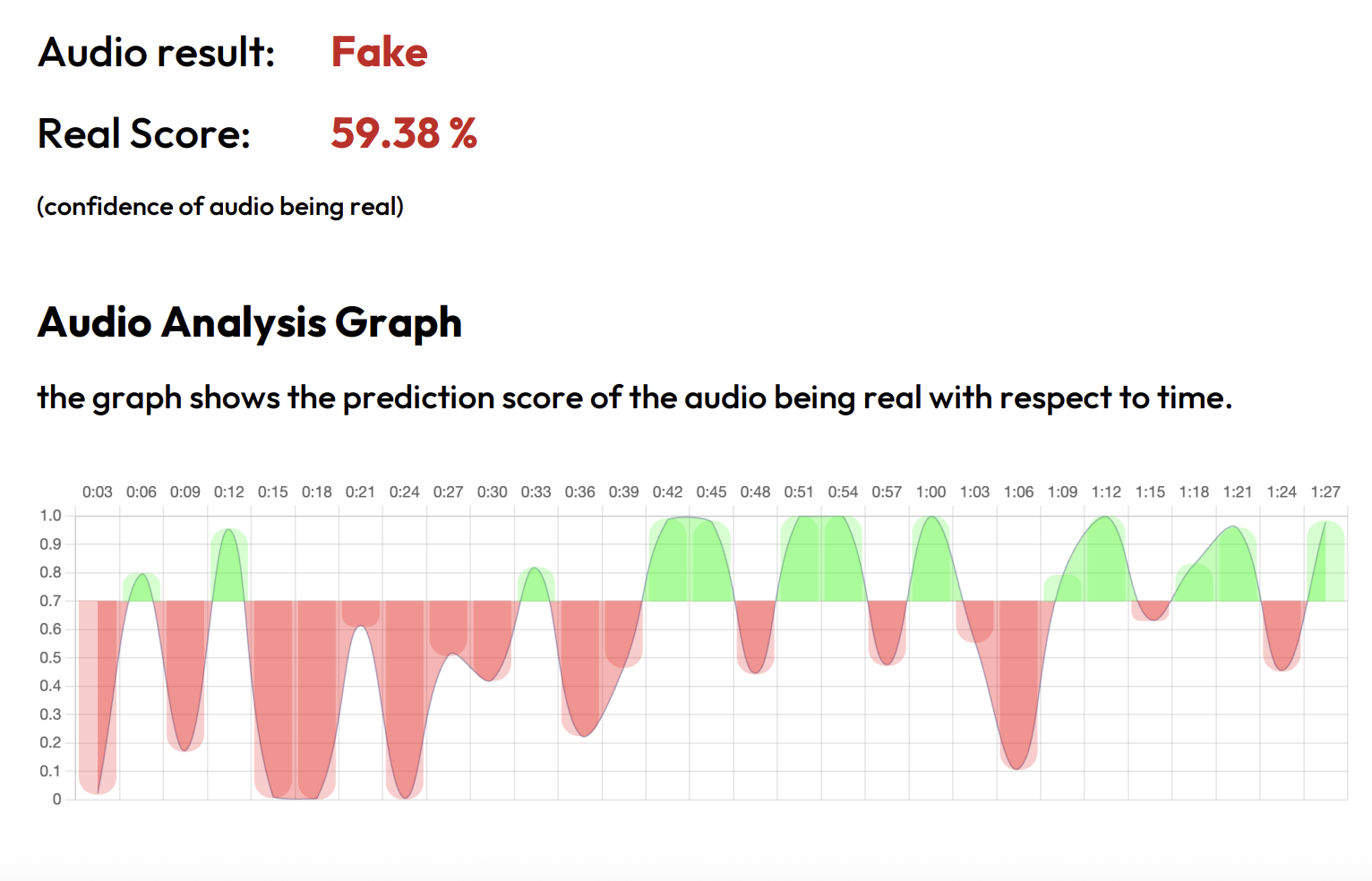

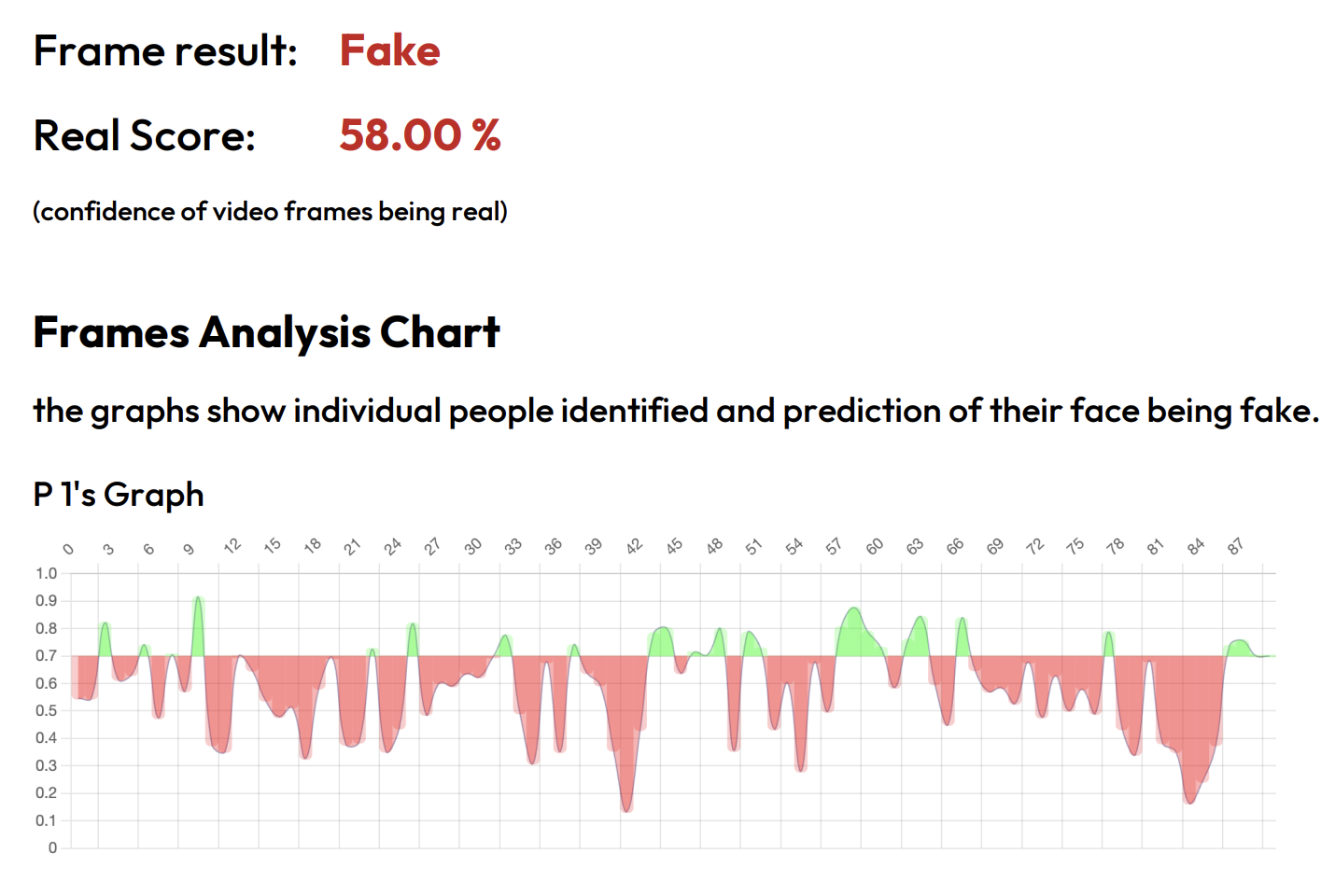

The team ran the video through both audio and video detection models; the results indicated manipulation in both the video and the audio tracks. They noted that the unnatural lip movements in the video indicate lip-sync manipulation.

At first, their default audio A.I. model flagged the entire audio track as A.I.-manipulated. They attributed this to an artificial timbre, or sound quality, that they noticed in the original audio track, which, as we pointed out above, was used to create the audio track in the doctored video. According to them, the multiple microphones visibly used for the recording in the original video could have contributed to this artificial quality of the sound.

However, after they reran the audio track through their proprietary low-resolution A.I. detector, they were able to establish that a roughly 15-second audio segment was manipulated.

The ConTrails team added that the voice in the audio track sounds very similar to the general’s and suggested that voice cloning methods were used to create the audio track. They noted that the quality of manipulation in the video is good, which is why the confidence score for the video analysis is low, as indicated by the graph below.

We also reached out to our partners at RIT’s DeFake Project to get expert analysis on the video. Kelly Wu from the project noted that it was very hard to identify the fake artefacts in the video track at first glance and that it was the name tag that caught her attention. Echoing our observations, she highlighted that the name tag in the doctored clip shows random characters, whereas in the real video the tag shows his name.

Ms. Wu further corroborated our analysis by pointing to the differences in the general’s uniform between the two videos, including the patterns on it. She also noted that his collar has three stars in the real video as opposed to four in the doctored one. She pointed to a small black horizontal line visible on the wall behind the general in the source video that is missing from the doctored video.

The RIT team transcribed both the real and doctored videos and confirmed our observations, noting that an audio segment in the video was generated and spliced into the real video to change the narrative.

Saniat Sohrawardi from the project further confirmed our observations by pointing to the differences in the general’s hand and head movements over matching audio snippets in the two videos. He said that their team was not sure what type of model was used to create this video, adding that they had not seen open-source models that could achieve these results.

Mr. Sohrawardi also acknowledged that a piece of audio about the Russian S-400 purchase was added to the audio track. He said this portion may have been generated, as the general’s tone becomes slightly more static and monotonous than in the speech before and after it. He described this as a new type of fake, alongside lip-sync deepfakes.

Comparing the general’s body language in the doctored clip with that in the original, he said that the officer seems more fidgety in the original, where his arms move around more and touch the microphones every now and then.

On the basis of our findings and expert analyses, we can conclude that synthetic audio was added to the general’s real audio to peddle a false narrative about India losing S-400 missile systems to Pakistan in the recent India-Pakistan conflict.

(Written by Debopriya Bhattacharya and Debraj Sarkar, edited by Pamposh Raina.)

Kindly Note: The manipulated video/audio files that we receive on our tipline are not embedded in our assessment reports because we do not intend to contribute to their virality.

You can read below the fact-checks related to this piece published by our partners:

Did Army Deputy Chief ‘Admit’ To India Losing S-400s During Conflict With Pakistan?

Edited Video Falsely Claims India Lost 2 S-400s in Pakistan Conflict